2026 D&A Predictions and Priorities: An IIA Expert Panel

Our much-anticipated Predictions and Priorities webinar is back! Join this panel of IIA Experts on December 9 to get their spin on emerging trends and where data and analytics leaders…

Explore insights from IIA and select content from our research library below. Our content is always independent and driven by our clients’ everyday problems, challenges and initiatives.

IIA Expert Marc Demarest breaks down the core components of IIA’s RBV Framework. Learn how to tailor your value model to your organization’s analytics maturity, stakeholder needs, and use case…

IIA Expert Scott Friesen leads this webinar tackling the age-old topic of communicating analytics value. Friesen will share his practical framework for raising the visibility and perceived value of…

Take a practical approach to proving ROI on analytics. Read our expert-designed eBook for a step-by-step guide on communicating the value of your projects.

Capturing the value of analytics projects is both art and science, and the exercise has become more complex with new techniques like generative AI. In this roundtable discussion facilitated by…

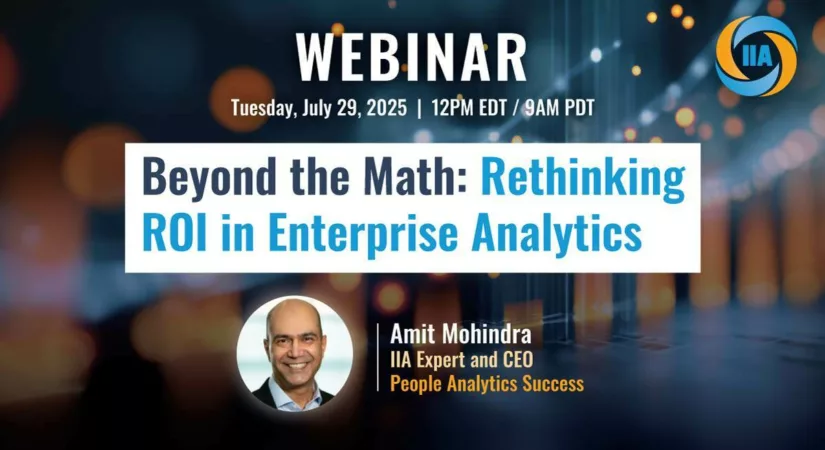

IIA Expert and seasoned analytics leader Amit Mohindra shares how to reframe analytics ROI to reflect not just measurable outcomes, but the relationships that sustain them.

Download this action plan template to plot out your 90-day roadmap and structure your business context, charter, operating model, and delivery plan.

Our latest webinar uncovers what it's like inside an organization transitioning to a federated model, featuring Keith Moody, vice president of analytics at Discount Tire.

Research & Advisory Network clients have full access to our research library, including strategic frameworks, topic deep dives, leading practices from blue-chip companies, and brief responses on the topics most commonly worked on across the client community. In addition, clients can directly engage with authors through IIA’s Expert Network.

Looking for more? Contact us today about how we can connect you to our Expert Network.