Client Inquiry:

I lead our AI Center of Excellence and partner closely with leaders across analytics, platforms, and AI operations. We have deployed traditional machine learning and large language model applications at scale, and we now see rising demand for agentic AI from functions across the company.

The challenge is that every major vendor is now shipping its own agent builder. We already run predictive and generative workloads on our centralized Google Cloud platform, but Salesforce, ServiceNow, Workday, and others are now offering agent frameworks that sit inside their ecosystems. Internal teams want to build agents wherever they work, and we need a cohesive approach to platform design, governance, and monitoring before this becomes unmanageable.

I need guidance on how to think about a unified agentic AI platform, how to monitor and govern agents that may run in multiple environments, how to handle the overlap with responsible AI requirements, and how other enterprises are structuring the operating model for agent development, oversight, and support.

Expert Takeaways:

1. Centralized platforms support scale, but multi-vendor reality requires a hybrid model

Centralized platforms offer control, consistency, and governance, but no enterprise can realistically contain all agentic development in a single environment. As vendors embed agents directly into their products, teams will expect to use those native capabilities. The practical path is not to force consolidation, but to design a hybrid model that defines what belongs in the central agent platform and what can live at the edge.

The central platform should host high-risk, cross-functional, or deeply integrated agents. Vendor platforms can host narrow agents tied to the workflow of that specific system. The key is establishing selection criteria so a team knows where an agent should be built before development begins.

Key Insights:

- Cross-functional agents with enterprise data access belong in the centralized platform

- Vendor-native agents are appropriate when they remain contained to that system’s data and actions

- Intake criteria should determine the appropriate development environment before work starts

- Hybrid models reduce friction while maintaining oversight

- The role of the Center of Excellence is to define boundaries, not to gatekeep innovation
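The selection criteria above can be expressed as a simple routing rule applied at intake. The sketch below is a minimal illustration, not a prescribed policy. The `AgentIntake` fields, the `route_agent` function, and the three triggers are assumptions chosen to mirror this section's insights: a high risk tier, reach beyond the host system, and enterprise data access.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    CENTRAL = "central agent platform"
    VENDOR_NATIVE = "vendor-native builder"


@dataclass
class AgentIntake:
    """Hypothetical intake record; field names are illustrative, not a standard form."""
    name: str
    host_system: str            # system the agent would live in, e.g. "ServiceNow"
    systems_touched: set[str]   # every system whose data or actions the agent uses
    uses_enterprise_data: bool  # reads shared enterprise data beyond the host system
    risk_tier: str              # "low", "medium", or "high" (assumed scale)


def route_agent(intake: AgentIntake) -> tuple[Placement, list[str]]:
    """Decide where an agent should be built before development starts.

    Any trigger sends the agent to the central platform. An agent with no
    triggers stays contained to its host system and may be built vendor-native.
    """
    reasons: list[str] = []
    if intake.risk_tier == "high":
        reasons.append("high risk tier")
    if intake.systems_touched - {intake.host_system}:
        reasons.append("touches systems outside " + intake.host_system)
    if intake.uses_enterprise_data:
        reasons.append("uses enterprise data")
    placement = Placement.CENTRAL if reasons else Placement.VENDOR_NATIVE
    return placement, reasons


# Example: a triage agent contained to ServiceNow versus one that also updates Workday.
for intake in [
    AgentIntake("ticket-triage", "ServiceNow", {"ServiceNow"}, False, "low"),
    AgentIntake("onboarding", "ServiceNow", {"ServiceNow", "Workday"}, False, "medium"),
]:
    placement, reasons = route_agent(intake)
    print(intake.name, placement.name, reasons)
```

Returning the reasons along with the placement tells a team why its agent was routed centrally. That supports the Center of Excellence's role of defining boundaries rather than gatekeeping.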

2. Monitoring agentic AI requires a new operational backbone

Traditional model monitoring does not extend cleanly to agentic systems. Agents can take sequences of actions, call external tools, and branch based on conditions, which means logs are larger, more dynamic, and more complex than standard prediction outputs. A monitoring approach that worked well for predictive and generative models will not scale once agents behave as semi-autonomous workers.

The monitoring layer must capture each step an agent takes, the context behind decisions, and the flow of data across systems. Enterprises will need a unified view even if agents run across multiple platforms. Vendor dashboards help, but they cannot be the entire solution.

Key Insights:

- Monitoring requires step-level traceability rather than simple output validation

- Logging must capture both reasoning and action sequences

- A unified monitoring layer is necessary even when agents run in multiple vendor ecosystems

- Vendor dashboards should feed into a central operational view rather than operate in isolation

- Monitoring frameworks used for predictive models rarely scale to agentic complexity without redesign
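One way to build the unified view is to normalize every platform's events into a shared step-level record before they reach the central monitoring layer. This is a minimal sketch under stated assumptions. The `AgentStep` schema, the `normalize_vendor_event` and `build_central_view` helpers, and the vendor event keys in `mapping` are all hypothetical. They do not reflect any real vendor's export format.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentStep:
    """One step of an agent run in a common, platform-neutral shape (illustrative schema)."""
    trace_id: str    # groups all steps of one agent run
    step: int        # order of the step within the run
    platform: str    # where the agent ran, e.g. "servicenow"
    action: str      # tool call or action the agent took
    rationale: str   # stated reason or context behind the decision
    systems: list[str] = field(default_factory=list)  # systems the data flowed through
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def normalize_vendor_event(platform: str, event: dict) -> AgentStep:
    """Map a vendor-specific event into AgentStep.

    The key names below are hypothetical; each real vendor export needs its own mapping.
    Order: (trace id, step index, action, rationale, systems).
    """
    mapping = {
        "servicenow": ("run_id", "seq", "tool", "reason", "targets"),
        "salesforce": ("sessionId", "stepIndex", "actionName", "explanation", "objects"),
    }
    trace_key, step_key, action_key, why_key, systems_key = mapping[platform]
    return AgentStep(
        trace_id=str(event[trace_key]),
        step=int(event[step_key]),
        platform=platform,
        action=event[action_key],
        rationale=event.get(why_key, ""),
        systems=list(event.get(systems_key, [])),
    )


def build_central_view(steps: list[AgentStep]) -> dict[str, list[AgentStep]]:
    """Group steps by run and order them so each run can be replayed end to end."""
    runs: dict[str, list[AgentStep]] = defaultdict(list)
    for s in steps:
        runs[s.trace_id].append(s)
    return {tid: sorted(run, key=lambda s: s.step) for tid, run in runs.items()}


# Example: two steps from the same run arrive out of order.
events = [
    ("servicenow", {"run_id": "r1", "seq": 2, "tool": "update_ticket",
                    "reason": "priority matched", "targets": ["ServiceNow"]}),
    ("servicenow", {"run_id": "r1", "seq": 1, "tool": "lookup_asset",
                    "reason": "needed device owner", "targets": ["CMDB"]}),
]
view = build_central_view([normalize_vendor_event(p, e) for p, e in events])
print([s.action for s in view["r1"]])
```

The record keeps the rationale and the systems touched on every step. A reviewer can therefore reconstruct both the reasoning and the action sequence of a run, regardless of which platform executed it.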

3. Governance for agents must start earlier than governance for models

Agentic AI changes the timing and scope of governance. Traditional governance reviews happen at model release checkpoints, but agentic systems require earlier alignment because they involve tools, actions, and workflows rather than just predictions. Legal, privacy, and security teams must evaluate what systems an agent touches, what actions it is authorized to take, and how failures will be mitigated.

Governance also must account for the fact that multiple vendors now embed agent builders inside their products. If these are allowed without guardrails, the organization will face a proliferation of agents operating under inconsistent review processes.

Key Insights:

- Governance must evaluate the agent’s toolset, action space, and permissions

- Access control must be designed before development begins

- Vendor-embedded agents require the same governance standard as centrally built agents

- Human-in-the-loop expectations must be defined based on risk

- Intake forms should force teams to clarify purpose, data use, and action boundaries
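The outcome of a governance review can be captured as a machine-checkable policy and enforced at runtime. The sketch below assumes a hypothetical `AgentPolicy` that holds an approved action list and a risk threshold for human approval. The `authorize` function, the three-level `Risk` scale, and the decision strings are illustrative and are not part of any specific product.

```python
from dataclasses import dataclass
from enum import IntEnum


class Risk(IntEnum):
    """Assumed three-level risk scale for individual actions."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AgentPolicy:
    """Approved scope for one agent, fixed during governance review (illustrative)."""
    agent: str
    allowed_actions: frozenset[str]  # the agent's approved action space
    approval_threshold: Risk         # actions at or above this risk need a human


def authorize(policy: AgentPolicy, action: str, action_risk: Risk) -> str:
    """Return 'deny', 'needs_human', or 'allow' for a proposed action."""
    if action not in policy.allowed_actions:
        return "deny"         # outside the reviewed action space
    if action_risk >= policy.approval_threshold:
        return "needs_human"  # human-in-the-loop requirement set by risk
    return "allow"


# Example: a refund agent approved to read orders and issue refunds.
refund_agent = AgentPolicy("refund-helper", frozenset({"read_order", "issue_refund"}), Risk.MEDIUM)
print(authorize(refund_agent, "read_order", Risk.LOW))
print(authorize(refund_agent, "issue_refund", Risk.HIGH))
print(authorize(refund_agent, "delete_account", Risk.LOW))
```

The policy object does not depend on where the agent was built. The same policy definition can therefore apply to centrally built and vendor-embedded agents wherever the runtime allows the check to be enforced.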

4. Operating models break down without clear ownership and boundaries

As agent adoption accelerates, the biggest risk is not technology but operating model confusion. Teams want autonomy to build, but centralized AI operations are still expected to monitor, support, and troubleshoot any agent that reaches production. Without clearly defined boundaries, AI operations will be overwhelmed and business teams will build agents that cannot be supported.

Clear division of responsibilities is essential. The Center of Excellence defines design standards, guardrails, and platform strategy. Business teams own use case definition and domain knowledge. AI operations owns runtime monitoring, escalation, and reliability. Without these boundaries, every agent becomes a one-off that requires bespoke support.

Key Insights:

- Scale depends on clear responsibility splits across the Center of Excellence, business teams, and AI operations

- Intake forms should define ownership for development, testing, and long-term support

- Unsupported vendor agents can burden operations if boundaries are not set early

- Centers of Excellence maintain architectural and governance authority without blocking innovation

- The operating model must cover build, deploy, support, update, and retire paths
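Lifecycle paths and ownership can be made explicit. One approach is to allow only defined stage transitions and to refuse deployment until the intake record names owners. This is a minimal sketch: the `Stage` values, the `TRANSITIONS` table, the required owner roles, and the `advance` function are assumptions for illustration.

```python
from enum import Enum


class Stage(Enum):
    BUILD = "build"
    DEPLOY = "deploy"
    SUPPORT = "support"
    UPDATE = "update"
    RETIRED = "retired"


# Assumed lifecycle policy: which stage may follow which.
TRANSITIONS = {
    Stage.BUILD: {Stage.DEPLOY},
    Stage.DEPLOY: {Stage.SUPPORT},
    Stage.SUPPORT: {Stage.UPDATE, Stage.RETIRED},
    Stage.UPDATE: {Stage.DEPLOY},  # updates go back through deployment
    Stage.RETIRED: set(),
}

# Owner roles the intake form must fill before any deployment (hypothetical names).
REQUIRED_OWNERS = ("development", "testing", "support")


def advance(current: Stage, target: Stage, owners: dict[str, str]) -> Stage:
    """Move an agent to the next stage, enforcing transitions and named ownership."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    if target is Stage.DEPLOY:
        missing = [role for role in REQUIRED_OWNERS if not owners.get(role)]
        if missing:
            raise ValueError("missing owners: " + ", ".join(missing))
    return target


# Example: an agent with named owners moves from build into production support.
owners = {"development": "Finance analytics", "testing": "Finance analytics",
          "support": "AI operations"}
stage = advance(Stage.BUILD, Stage.DEPLOY, owners)
stage = advance(stage, Stage.SUPPORT, owners)
print(stage.value)
```

The ownership check on deployment keeps agents that no one has agreed to support from reaching production and landing on AI operations by default.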

5. Tooling decisions should be driven by use case categories rather than vendor momentum

Enterprises often evaluate agent platforms by looking at features, demos, or vendor roadmaps. Instead, the decision should come from understanding the categories of agentic work the organization needs to support. Maintenance diagnostics, customer service triage, financial workflow automation, and supply chain exception handling each have different requirements for autonomy, action scope, and integration.

Platforms should be selected to fit these clusters of use cases instead of trying to force a single vendor to handle all needs. If the organization identifies categories of agents it intends to support, platform decisions become clearer and more defensible.

Key Insights:

- Define clusters of agent use cases before selecting platforms

- High-autonomy agents require deeper integration and centralized oversight

- Workflow-contained agents may fit best inside vendor ecosystems

- Platform diversity is manageable when tied to clear use case categories

- Strategic clarity reduces vendor lock-in and prevents premature platform commitments
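Clustering a use case backlog by requirement profile before comparing platforms can be as simple as grouping on a few attributes. The sketch below is a simplification under stated assumptions. It uses only two of the three dimensions named above: autonomy and action scope. The `UseCase` fields, the `PLATFORM_FOR_PROFILE` mapping, and the profiles assigned to the four example use cases are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    """A candidate agent use case with a simplified requirement profile (hypothetical fields)."""
    name: str
    autonomy: str      # "assistive" (suggests, a human acts) or "autonomous" (acts on its own)
    action_scope: str  # "single_system" or "cross_system"


# Assumed mapping from requirement profile to where that cluster should be built.
PLATFORM_FOR_PROFILE = {
    ("assistive", "single_system"): "vendor-native",
    ("autonomous", "single_system"): "vendor-native with central oversight",
    ("assistive", "cross_system"): "central platform",
    ("autonomous", "cross_system"): "central platform",
}


def cluster(use_cases: list[UseCase]) -> dict[tuple[str, str], list[str]]:
    """Group use case names by (autonomy, action_scope), keeping first-seen order."""
    groups: dict[tuple[str, str], list[str]] = defaultdict(list)
    for uc in use_cases:
        groups[(uc.autonomy, uc.action_scope)].append(uc.name)
    return dict(groups)


# Example backlog; the profiles assigned here are illustrative assumptions.
backlog = [
    UseCase("maintenance diagnostics", "assistive", "cross_system"),
    UseCase("customer service triage", "assistive", "single_system"),
    UseCase("financial workflow automation", "autonomous", "cross_system"),
    UseCase("supply chain exception handling", "autonomous", "cross_system"),
]

for profile, names in cluster(backlog).items():
    print(PLATFORM_FOR_PROFILE[profile], names)
```

Once the clusters exist, each platform decision can be justified against a category of work rather than a single demo.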

Expert Network

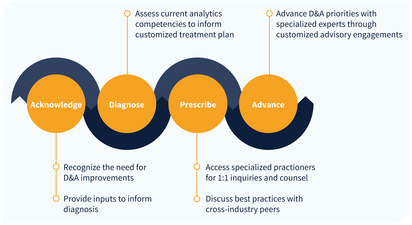

IIA provides guided access to our network of over 150 analytics thought leaders, practitioners, executives, data scientists, and data engineers through curated, facilitated 1-on-1 interactions.

- Tailored support to address YOUR specific initiatives, projects and problems

- High-touch onboarding to curate 1-on-1 access to the most relevant experts

- On-demand inquiry support

- Plan validation and ongoing guidance to advance analytics priority outcomes

- Monthly roundtables facilitated by IIA experts on the latest analytics trends and developments