Client Inquiry:

I lead AI governance at my organization, and our biggest challenge right now is figuring out how to manage the shift from traditional AI and automation into agentic AI. We already govern machine learning and generative models, but agentic AI introduces new questions about monitoring, orchestration, and oversight. We have agents being developed across AWS, Microsoft, Salesforce, and ServiceNow, and each platform wants to operate in its own silo.

I need clarity on what governance looks like for agentic systems, what parts of the lifecycle we should build internally, and where it makes more sense to rely on vendor platforms. I also need guidance on how to manage monitoring, metadata, tokenization costs, and cross-platform orchestration as our use cases scale. Ultimately, I need to understand how to support agentic AI responsibly without overextending internal teams or locking us into tools that cannot grow with us.

Expert Takeaways:

1. Agentic AI governance is a new discipline, so expect gaps, immaturity, and rapid change

Governance for agentic systems is not an extension of traditional MLOps. It introduces new questions about orchestration, cross-platform integration, and oversight of autonomous workflows that borrow elements from RPA, generative AI, and human decision paths. Every enterprise is still figuring it out, so confusion is normal.

Agentic AI adoption is growing faster than governance frameworks can keep up. As teams across the enterprise begin building their own agents, the organization needs a way to inventory, supervise, and validate them before use proliferates unchecked. The governance model must treat agentic systems like a new category of AI asset rather than an evolution of chatbots or automation.

Key Insights:

- Treat agentic oversight as a distinct governance layer, not an add-on to traditional ML governance

- Expect questions about appropriate use, reuse, and risk long before tooling matures

- Build a plan for how to monitor, inventory, and validate agents as they appear across teams

- Recognize that agentic systems borrow from RPA, ML, and GenAI, which complicates lifecycle management

- Assume that demand across the business will grow faster than internal readiness

2. A cross-platform governance layer is essential because agentic AI will not live in one system

Core enterprise platforms each want to be the primary home for agent development, but none of them can govern agents built in other ecosystems. AWS can support its own agents well, Salesforce can govern agents built in Salesforce, and Microsoft’s tools work best inside the Microsoft fabric. None of them can coordinate agents acting across multiple systems or provide a unified governance model for cross-functional workflows.

What the organization actually needs is a layer above the platforms that can inventory every agent, track who is using what, compare overlapping capabilities, and support oversight across the full ecosystem. Without that layer, the organization risks duplicate agents, hidden automation, inconsistent risk controls, and a lack of visibility into how AI is operating inside the business.

Key Insights:

- Platform-native agent tools cannot govern or orchestrate agents built outside their own environment

- Cross-platform visibility is necessary once finance, HR, operations, and engineering start developing their own agents

- A shared inventory of agents and their capabilities is required to reduce duplication

- Governance must follow the agent, not the platform it was created in

- Cross-functional orchestration becomes the central gap when multiple enterprise platforms introduce their own agent frameworks
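To make the shared inventory idea concrete, the following is a minimal sketch of a platform-neutral agent record and a check for overlapping capabilities. It is an illustration only: the `AgentRecord` fields, the capability tags, and the sample agents are all hypothetical and do not correspond to any vendor's API or schema.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentRecord:
    """One inventory entry. Every field name here is an illustrative assumption."""
    agent_id: str
    platform: str      # where the agent was built, e.g. "AWS" or "Salesforce"
    owner_team: str    # team accountable for the agent
    capabilities: frozenset[str] = field(default_factory=frozenset)


def find_overlaps(records):
    """Return capabilities offered by two or more agents, with the agents offering them."""
    by_capability = defaultdict(list)
    for record in records:
        for capability in sorted(record.capabilities):
            by_capability[capability].append(record.agent_id)
    return {cap: ids for cap, ids in by_capability.items() if len(ids) > 1}


# Hypothetical sample inventory spanning several platforms.
inventory = [
    AgentRecord("invoice-triage", "ServiceNow", "finance",
                frozenset({"invoice_matching", "ticket_routing"})),
    AgentRecord("ap-assistant", "Salesforce", "finance-ops",
                frozenset({"invoice_matching"})),
    AgentRecord("hr-onboarding", "Microsoft", "hr",
                frozenset({"account_provisioning"})),
]

overlaps = find_overlaps(inventory)
for capability, agent_ids in overlaps.items():
    print(f"{capability}: offered by {', '.join(agent_ids)}")
```

Because the record carries the platform as an attribute rather than living inside any one platform, the same duplication check works no matter where an agent was built.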

3. A self-service layer can accelerate adoption while keeping governance intact

Teams across the business want to build agents, but most do not have the skills or context required to do it safely. A self-service layer can lower the barrier to entry through templates, metadata-driven design, and no-code interfaces. Because agents are built through governed templates rather than ad hoc tooling, teams are less likely to bypass policies or create unmonitored agents.

The self-service model also helps differentiate between true agentic use cases and workflows that should remain simple automation. Many proposed “agents” are nothing more than conditional process flows, which can be built faster and cheaper through RPA or Power Automate. The governance intake process should explicitly separate the two.

Key Insights:

- Self-service agent creation requires a structured metadata layer to maintain consistency

- No-code tools reduce dependence on engineering and AI specialists

- Many proposed agent use cases are better suited to low-cost automation

- Governance should guide teams through a decision process to match automation type to business need

- A self-service catalog helps minimize shadow AI while enabling controlled experimentation
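One way to picture the intake decision process is as a small routing function that separates conditional process flows from candidate agentic use cases. This is a sketch under stated assumptions: the two screening questions, the `IntakeRequest` type, and the outcome labels are hypothetical, and a real intake form would likely ask more.

```python
from dataclasses import dataclass


@dataclass
class IntakeRequest:
    """Hypothetical intake form; both screening questions are assumptions."""
    name: str
    rules_fully_specified: bool    # every branch can be written as if/then logic
    needs_dynamic_planning: bool   # steps cannot be enumerated in advance


def recommend_approach(request):
    """Route a request to simple automation, agentic review, or manual review."""
    # Answers that agree with each other (both yes or both no) are contradictory
    # or incomplete, so a person should look at the request.
    if request.rules_fully_specified == request.needs_dynamic_planning:
        return "manual-review"
    if request.rules_fully_specified:
        return "automation"         # e.g. RPA or Power Automate
    return "agentic-candidate"      # proceeds to agentic governance review


requests = [
    IntakeRequest("expense approval routing", True, False),
    IntakeRequest("vendor dispute research", False, True),
    IntakeRequest("unclear pilot idea", False, False),
]
for request in requests:
    print(f"{request.name}: {recommend_approach(request)}")
```

The point of the design is that the default path is not "build an agent": a request has to show that its steps cannot be enumerated before it reaches agentic review.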

4. Cost governance, especially for token consumption, must be addressed early before usage surges

Agentic systems can generate significant compute consumption because each action may trigger multiple LLM queries. Without cost transparency, teams will choose the most exciting tool rather than the most efficient one. Governance must include a consistent way to evaluate token consumption, compare RPA to agentic workflows, and communicate the financial impact of design choices.

Per-token billing, AI Builder credits, and platform-specific pricing models all create cost variability. The governance team needs a unified framework for comparing alternatives so that decision makers understand the tradeoffs.

Key Insights:

- Agentic AI can become expensive quickly when LLM calls drive each step of the workflow

- Many processes are cheaper to implement with RPA or deterministic logic

- Evaluation of use cases should include a standard method for comparing token, credit, or compute costs

- Cost clarity helps curb unnecessary demand for agentic solutions

- Training on prompt discipline and query quality reduces redundant or excessive LLM calls
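A standard comparison method can be as simple as converting each option to a monthly cost on the same basis. The sketch below assumes per-token pricing quoted per million tokens and a flat monthly license for the automation alternative. Every number in it is a placeholder chosen for illustration, not a real vendor price, and the function names are hypothetical.

```python
def agentic_cost_per_run(llm_calls, input_tokens_per_call, output_tokens_per_call,
                         input_price_per_million, output_price_per_million):
    """Estimated model cost of one workflow run, given per-million-token prices."""
    per_call = (input_tokens_per_call * input_price_per_million
                + output_tokens_per_call * output_price_per_million) / 1_000_000
    return llm_calls * per_call


def monthly_cost(cost_per_run, runs_per_month, fixed_monthly_cost=0.0):
    """Total monthly cost: a fixed component plus a per-run component."""
    return fixed_monthly_cost + cost_per_run * runs_per_month


# Placeholder assumptions for one hypothetical workflow.
runs_per_month = 10_000
per_run = agentic_cost_per_run(
    llm_calls=6,                    # an agent may call the model several times per action
    input_tokens_per_call=2_000,
    output_tokens_per_call=500,
    input_price_per_million=3.0,    # placeholder price, not a real quote
    output_price_per_million=15.0,  # placeholder price, not a real quote
)
agentic_monthly = monthly_cost(per_run, runs_per_month)
rpa_monthly = monthly_cost(0.0, runs_per_month, fixed_monthly_cost=400.0)  # placeholder license

print(f"Agentic: {agentic_monthly:.2f} per month")
print(f"RPA:     {rpa_monthly:.2f} per month")
```

Credit-based pricing can be folded into the same comparison by converting credits to currency before calling `monthly_cost`. The multiplier that matters most is `llm_calls`, which is why workflows that drive every step through an LLM become expensive quickly.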

5. A central inventory and monitoring framework becomes the backbone of agentic AI governance

As more teams develop agents, the risk is not just technical. It becomes harder to know which agent is doing what, whether multiple teams are creating redundant tools, and whether an agent is being used outside its intended scope. A shared inventory acts as the anchor for oversight, and monitoring can ensure that agents continue to behave within acceptable boundaries.

The governance model must include visibility into adoption, lineage, data access, and performance. This is especially important once agents begin using data from multiple platforms, including customer-facing systems like Salesforce and operational systems in AWS.

Key Insights:

- A shared registry of agents prevents redundancy and shadow development

- Monitoring must cover performance, drift, data access, and usage patterns

- Governance needs to detect when other teams begin using an agent built for a different purpose

- Inventorying agents should follow the same disciplines already used to inventory ML models

- A centralized AI governance function is essential for coordinating oversight across platforms and teams
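Detecting use outside an agent's intended scope can start with a simple comparison between usage events and the consumers recorded in the registry. The following is a minimal sketch, assuming usage can be exported as (agent, team) pairs. The registry contents, team names, and event data are hypothetical.

```python
from collections import Counter

# Hypothetical registry extract: which teams each agent was approved for.
approved_consumers = {
    "invoice-triage": {"finance"},
    "hr-onboarding": {"hr"},
}

# Hypothetical usage log, as (agent_id, calling_team) pairs.
usage_events = [
    ("invoice-triage", "finance"),
    ("invoice-triage", "procurement"),
    ("invoice-triage", "procurement"),
    ("hr-onboarding", "hr"),
    ("unregistered-bot", "sales"),
]


def flag_unapproved_usage(events, approved):
    """Count events where the calling team is not approved for the agent.

    Agents missing from the registry have no approved teams, so all of
    their usage is flagged as well.
    """
    flags = Counter()
    for agent_id, team in events:
        if team not in approved.get(agent_id, set()):
            flags[(agent_id, team)] += 1
    return dict(flags)


flagged = flag_unapproved_usage(usage_events, approved_consumers)
for (agent_id, team), count in flagged.items():
    print(f"{agent_id} used by {team}: {count} event(s) outside approved scope")
```

The same pass surfaces both scope creep on registered agents and activity from agents that never entered the inventory, which ties monitoring back to the registry as the anchor for oversight.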

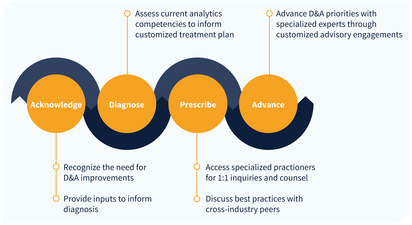

Expert Network

IIA provides guided access to our network of over 150 analytics thought leaders, practitioners, executives, data scientists, and data engineers through curated, facilitated 1-on-1 interactions.

- Tailored support to address YOUR specific initiatives, projects and problems

- High-touch onboarding to curate 1-on-1 access to the most relevant experts

- On-demand inquiry support

- Plan validation and ongoing guidance to advance analytics priority outcomes

- Monthly roundtables facilitated by IIA experts on the latest analytics trends and developments