Client Inquiry

I lead AI business transformation within a large aftermarket and customer support organization. We support thousands of employees across many different roles, and we are responsible for getting AI into customer-facing products while also transforming how internal teams work. We have had some success with Copilot and citizen development, but adoption is inconsistent, experiments are scattered, and we do not have a clear structure for scaling AI across functions.

We are exploring whether domain-specific centers of excellence, expert pods, or other operating models can help us move faster. How are leading organizations structuring their teams, talent, governance, and guardrails to drive meaningful AI transformation at scale?

Expert Takeaways:

1. Real AI transformation requires a structured operating model, not scattered experimentation

AI will not spread organically, even in organizations with strong enthusiasm and executive support. Without structural scaffolding such as defined roles, repeatable patterns, reusable components, and clear ownership, teams experiment in isolated pockets that never compound into enterprise value. The companies that are furthest ahead treat AI transformation as an operating model redesign rather than a tooling rollout.

AI needs a home. It needs leadership. It needs a model that determines how work flows, who builds what, who supports what, and how value is measured. When that structure is missing, organizations see high activity and low impact.

Key Insights:

- AI adoption accelerates only when the operating model tells people where to go for guidance, support, and decisions

- Tools like Copilot spread quickly, but the underlying transformation stalls without defined workflows

- Enterprise AI requires repeatable patterns, reusable assets, and shared components

- Without structure, every function builds AI differently, which creates duplication and inconsistent quality

- Successful organizations treat AI as a cross-functional capability that reshapes how work is done

2. Centers of Excellence work only when paired with embedded domain pods

A centralized center of excellence (COE) provides expertise, standards, and coordination, but it cannot drive transformation alone. The real progress happens when expertise lands inside business domains through embedded pods. These pods are small cross-functional groups that understand the work, the data, and the decisions of each team.

The COE sets the scaffolding. The domains generate the momentum. This hybrid structure gives people close to the work the support they need while preventing the fragmentation that happens when every team invents its own approach.

Key Insights:

- A COE should own standards, platform choices, guardrails, and architectural patterns

- Embedded pods translate those patterns into real workflows and role changes

- Pods pair technical talent with domain leaders to redesign work, not simply automate tasks

- The COE provides reusable templates and components so pods do not start from scratch

- Hybrid structures scale faster than purely centralized or decentralized models

3. Role redesign is the primary lever for value, and it requires hands-on partnership

Improving productivity through Copilot is the easy part. Transforming roles is the real work. Every function has dozens of hidden, manual, repetitive activities that AI can reduce or eliminate. People do not naturally identify these opportunities on their own. They need guided discovery, facilitated design sessions, and structure for rethinking workflows.

When organizations skip role redesign, they get lightweight wins that do not scale and do not change how the business operates. When they invest in it, they uncover some of the highest-value opportunities in the enterprise.

Key Insights:

- Role decomposition sessions expose the real opportunities for AI-enabled redesign

- Many hidden tasks can be automated or triaged with AI, but teams rarely surface them without facilitation

- Productivity gains come from rethinking entire workflows rather than injecting AI into isolated steps

- Role redesign works best when experts sit with teams and map real work rather than theoretical processes

- Change sticks only when redesigned roles align with incentives, KPIs, and accountability structures

4. Citizen development cannot scale without patterns, guardrails, and shared components

Many companies assume that giving people Copilot, Power Apps, or low-code tools will unlock a wave of innovation. In reality, without patterns and guardrails, citizen development creates risk, redundancy, and support burdens that technical teams cannot absorb.

The goal is not to stop citizen developers. It is to channel their energy into governed, repeatable pathways. The organizations that make this work offer curated libraries, vetted components, templated architectures, and simple intake processes that prevent chaos while still encouraging creativity.

Key Insights:

- Citizen developers need clear lanes for what they can build, what requires review, and what must go through technical teams

- Reusable components maintain quality and reduce long-term support costs

- A structured intake process keeps experimentation aligned with enterprise priorities

- Guardrails prevent functions from building unsupported agents, models, or workflows

- Adoption increases when citizen developers have a safe, supported environment to build in
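
The idea of "clear lanes" can be made concrete as a simple intake rule. The Python sketch below is illustrative only: the three lanes come from the list above, but the intake questions (`uses_vetted_components_only`, `touches_customer_data`, `acts_without_human_approval`) and the routing rules are hypothetical assumptions, not criteria named by the expert. Each organization would substitute its own.

```python
from dataclasses import dataclass
from enum import Enum


class Lane(Enum):
    SELF_SERVICE = "citizen developer can build"
    REVIEW = "requires review"
    TECHNICAL_TEAM = "must go through technical teams"


@dataclass
class IntakeRequest:
    # Hypothetical intake-form questions, assumed for illustration.
    uses_vetted_components_only: bool
    touches_customer_data: bool
    acts_without_human_approval: bool


def assign_lane(request: IntakeRequest) -> Lane:
    """Route a request to one of three lanes using illustrative rules."""
    # Assumption: autonomous actions are the highest-risk case.
    if request.acts_without_human_approval:
        return Lane.TECHNICAL_TEAM
    # Assumption: customer data or unvetted components trigger a review.
    if request.touches_customer_data or not request.uses_vetted_components_only:
        return Lane.REVIEW
    # Everything else stays in the self-service lane.
    return Lane.SELF_SERVICE


if __name__ == "__main__":
    request = IntakeRequest(
        uses_vetted_components_only=True,
        touches_customer_data=True,
        acts_without_human_approval=False,
    )
    print(assign_lane(request).value)  # prints "requires review"
```

Keeping the rule this small is deliberate: a citizen developer should be able to predict the lane before submitting the request.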

5. AI transformation succeeds when operating model, governance, and culture evolve together

Operating model decisions cannot be isolated from governance or culture. AI introduces new risks such as rogue agents, inconsistent outputs, quality issues, and unclear ownership when workflows automate themselves. Without governance woven into the operating model, teams move fast and break things, sometimes in production.

Culturally, teams need clarity about what AI can do, what AI should not do, and how their roles will evolve. Without this alignment, adoption fractures, anxiety increases, and transformation slows.

Key Insights:

- Governance must be embedded into the operating model instead of bolted on after deployment

- Automated workflows require monitoring, versioning, and clear escalation paths

- Teams need clarity on what constitutes safe versus unsafe AI usage

- Organizational norms must evolve alongside new tools and capabilities

- The operating model, governance strategy, and change management plan must reinforce one another
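
One way to embed governance rather than bolt it on is to record every automated workflow in a registry and check each entry before it goes live. The Python sketch below is a minimal illustration under stated assumptions: the four checks mirror the requirements listed above (versioning, ownership, monitoring, escalation paths), while the `AutomatedWorkflow` fields and the `governance_gaps` helper are hypothetical names introduced here, not part of any specific platform.

```python
from dataclasses import dataclass


@dataclass
class AutomatedWorkflow:
    # Hypothetical registry fields, assumed for illustration.
    name: str
    version: str             # empty string means the workflow is unversioned
    owner: str               # accountable person or team; empty means unowned
    monitored: bool          # whether outputs are being monitored
    escalation_contact: str  # who is notified when something goes wrong


def governance_gaps(workflow: AutomatedWorkflow) -> list[str]:
    """Return the governance requirements this workflow does not yet meet."""
    gaps = []
    if not workflow.version:
        gaps.append("versioning")
    if not workflow.owner:
        gaps.append("ownership")
    if not workflow.monitored:
        gaps.append("monitoring")
    if not workflow.escalation_contact:
        gaps.append("escalation path")
    return gaps


if __name__ == "__main__":
    draft = AutomatedWorkflow(
        name="warranty-triage",
        version="",
        owner="support-ops",
        monitored=False,
        escalation_contact="",
    )
    # prints ['versioning', 'monitoring', 'escalation path']
    print(governance_gaps(draft))
```

A workflow with an empty gap list would be eligible for deployment. Anything else goes back to the owning pod with a specific list of what is missing.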

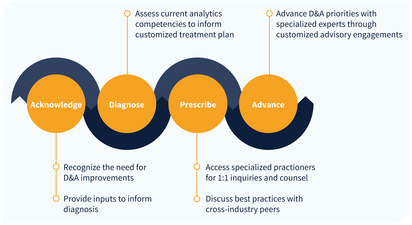

Expert Network

IIA provides guided access to our network of over 150 analytics thought leaders, practitioners, executives, data scientists, and data engineers through curated, facilitated 1-on-1 interactions.

- Tailored support to address YOUR specific initiatives, projects and problems

- High-touch onboarding to curate 1-on-1 access to the most relevant experts

- On-demand inquiry support

- Plan validation and ongoing guidance to advance analytics priority outcomes

- Monthly roundtables facilitated by IIA experts on the latest analytics trends and developments