Client Inquiry:

I lead data governance at my organization and need guidance on how governance should evolve to meet the demands of AI. Historically, governance initiatives here have stalled due to low adoption, unclear value, and repeated false starts over the course of decades. Engineering teams do not see themselves as data owners or stewards, and they do not use our catalog or quality tools.

My core questions are:

- How are leading organizations modernizing data governance to support AI?

- Where can AI realistically accelerate governance, and where is it still unreliable?

- How do I help engineering adopt governance by solving real problems like findability, usability, and trust rather than repeating past, theory-heavy governance programs?

Expert Takeaways:

1. AI can support governance, but it is not yet a true accelerator

Despite industry enthusiasm, AI has not meaningfully sped up the core work of governance. Automation looks promising on paper, but reliability fades once tasks require nuance, context, or domain knowledge. This is particularly true in engineering and compliance environments where dependencies and rules are complex.

Even when AI produces usable results, consistency is a recurring issue. Identical inputs often yield different outputs. That variability makes automated metadata tagging, lineage mapping, and quality assessments difficult to trust without human review. And if the time spent on human review exceeds the time saved, automation creates more work instead of reducing it.

AI still helps, but only in narrow areas where the cost of error is low and the output does not require deep reasoning or precision.
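One way to make the consistency concern measurable is to run the same input through a tagging tool several times and check how often the outputs agree, then compare review time against time saved. The following is a minimal sketch under stated assumptions, not a recommended implementation: the function names, the 0.9 agreement threshold, the sample labels, and the minute estimates are all hypothetical and stand in for whatever tool and figures an organization actually has.

```python
from collections import Counter


def agreement_rate(labels):
    """Share of repeated runs that returned the most common label."""
    if not labels:
        raise ValueError("need at least one run")
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def needs_human_review(labels, threshold=0.9):
    """Flag an item when repeated runs disagree too often (threshold is an assumption)."""
    return agreement_rate(labels) < threshold


def net_minutes_saved(items, manual_min, review_min, review_share):
    """Manual effort avoided minus the time spent reviewing automated output."""
    avoided = items * manual_min
    review = items * review_share * review_min
    return avoided - review


# Hypothetical results: five repeated runs of a tagger on the same two columns.
runs = {
    "customer_email": ["PII", "PII", "PII", "PII", "PII"],
    "order_notes": ["PII", "free_text", "PII", "internal", "free_text"],
}

flagged = [name for name, labels in runs.items() if needs_human_review(labels)]
print(flagged)

# Hypothetical estimates: 100 items, 3 minutes each by hand, 4 minutes per review.
print(net_minutes_saved(100, 3, 4, review_share=1.0))   # every item reviewed
print(net_minutes_saved(100, 3, 4, review_share=0.25))  # a quarter reviewed
```

With these illustrative numbers, reviewing every item costs more time than tagging by hand, while reviewing only the low-agreement quarter leaves a net saving. That is the narrow, low-cost-of-error pattern described above.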

Key Insights:

- Helpful for simple extraction, summarization, and basic document analysis

- Unreliable when tasks require causality, reasoning, or domain-heavy interpretation

- Categorization and classification often remain inconsistent

- Human review is required for any task tied to accuracy, risk, or regulatory obligations

- Best positioned as a selective productivity aid instead of a governance engine

2. Governance problems are usually system problems rather than tooling problems

Most governance failures come from fragmented systems and disconnected workflows, not from a lack of catalogs or policies. Engineering environments often include dozens of tools and data stores, each optimized for a single function but not for the entire flow of work.

When systems do not integrate, no amount of policy can repair the gaps. People struggle not because a policy is missing, but because information is hard to find, hard to access, and hard to trust at the moment they need it.

Effective governance begins by understanding how work actually moves across systems and where friction appears. Architecture, integration, and information design often matter more than governance documentation.
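Workflow mapping can start as a simple list of steps, the system each step touches, and how long people wait at each one. The sketch below is illustrative only: the `Step` structure, the step names, the systems, and the wait times are invented for the example and are not drawn from any real environment.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    system: str
    wait_hours: float  # time spent waiting on access, answers, or approvals


def count_handoffs(steps):
    """Number of times consecutive steps move from one system to another."""
    return sum(1 for a, b in zip(steps, steps[1:]) if a.system != b.system)


def slowest_step(steps):
    """Step with the longest wait, a first candidate for where friction appears."""
    return max(steps, key=lambda s: s.wait_hours)


# Hypothetical engineering workflow, recorded by observing one real task.
workflow = [
    Step("find test spec", "document repository", 2.0),
    Step("locate sensor data", "data catalog", 1.0),
    Step("request access", "ticketing system", 48.0),
    Step("join with part records", "local spreadsheet", 6.0),
]

print(count_handoffs(workflow))
print(slowest_step(workflow).name)
```

Even a rough map like this shows how many systems a single task crosses and which step dominates the delay, which is often more actionable than a policy document.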

Key Insights:

- Fragmentation, not policy, is the primary barrier to findability and usability

- Engineering tasks often require information that spans products, processes, and repositories

- Many catalogs fail because discovery alone does not solve the deeper problems of accessing data and stitching it together across systems

- Mapping real workflows exposes the true governance requirements

- System alignment is frequently the prerequisite to governance adoption

3. Findability, not ownership, is the engineering team’s core pain point

Engineering teams rarely identify as data stewards, and forcing stewardship language on them is ineffective. Their central need is much simpler: the ability to quickly find the right information and understand how to use it.

Traditional governance asks engineers to adopt new responsibilities without addressing the underlying problems that make work difficult. Progress begins by observing how engineers search for information and where systems slow them down.

Governance gains traction when it makes daily work easier through better access, clearer documentation, and improved usability.

Key Insights:

- Engineers prioritize findability and usability over stewardship definitions

- Catalogs often fail because they do not solve the deeper discovery and access issues

- Workflow mapping helps reveal where retrieval and context break down

- Integration and automation can reduce dependence on manual access requests

- Governance should improve daily engineering work, not add overhead

4. Repeated governance failures create cultural fatigue, so solve a real problem first

Decades of stalled or abandoned governance programs leave behind skepticism that makes new initiatives hard to launch. When people believe governance never works in their organization, they disengage before a program even begins.

The path forward is to begin not with theory but with a practical workflow that repeatedly causes frustration, rework, or unnecessary delay. Fix that workflow fully, and then connect the improvement back to governance principles. Value becomes visible through experience instead of documentation.

Early wins tied to real work build credibility and show that governance is an operational enabler rather than a bureaucratic exercise.

Key Insights:

- Long histories of failed governance efforts create resistance that frameworks cannot overcome

- Theory-first governance does not resonate with engineering teams

- Begin with a specific, painful workflow and fix it end to end

- Use the improvement to demonstrate the operational value of governance

- Momentum grows through demonstrated value rather than policy language

5. Scaling governance requires rethinking incentives and redefining ownership paths

Governance often breaks down because the people who discover data issues are not the people empowered to fix them, and the teams who own the systems do not feel accountable for usability or data quality.

This misalignment leads to slow approvals, blocked requests, and unclear responsibilities. No governance tool can overcome this without addressing the operating model beneath it.

Governance scales when incentives, responsibilities, and workflows encourage issues to be resolved as close as possible to where they surface. This can require revisiting organizational structures, handoff paths, and the division of responsibilities between engineering and IT.

Key Insights:

- The people who identify problems often lack authority to resolve them

- Ticket-driven workflows create delays and frustration

- Incentives must reinforce shared responsibility for data quality and accessibility

- Informal problem-solvers are often the best candidates for governance champions

- Governance maturity depends on aligning ownership and incentives, not just tools

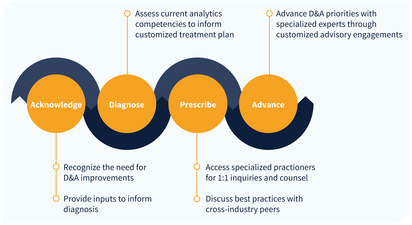

Expert Network

IIA provides guided access to our network of over 150 analytics thought leaders, practitioners, executives, data scientists, data engineers with curated, facilitated 1-on-1 interactions.

- Tailored support to address YOUR specific initiatives, projects and problems

- High-touch onboarding to curate 1-on-1 access to most relevant experts

- On-demand inquiry support

- Plan validation and ongoing guidance to advance analytics priority outcomes

- Monthly roundtables facilitated by IIA experts on the latest analytics trends and developments