Part 1: Enterprise Generative AI: Patterns, Cycles and Strategies for Deriving Business Value

In this article, we will explore some paths toward greater enterprise and production-scale maturity in the journey of adopting generative AI. How do we scale our applications from research to prototype to production? Consider these patterns as ways of maturing the development process as you move toward enterprise-scale production.

We will explore a non-exhaustive list of techniques that are often combined to make composite patterns of how you deal with typical problems and challenges encountered as you seek to adopt generative AI at the enterprise level.

You can use this as a checklist for patterns to adopt for using generative AI at a production scale in an enterprise or industrial environment. Also, you can use this to prepare your enterprise for generative AI through awareness of some of the many skills you may need to overcome common challenges in the journey of its adoption.

Fundamentally, ML is about creating or selecting a model and seeing how it performs on some data as it attempts a downstream prediction or generation task. This process is inherently experimental and iterative, much like the training algorithms themselves, which use backpropagation to converge toward a set of weights that reduces the loss on the downstream task.

Iterations and cycles: LangChain has been a highly popular and useful library to help create a chain of tasks for generative AI. But these chains are not one-and-done. They are fundamentally experiments, so we must prepare ourselves, our teams, and our enterprises to cycle through these chains and iterate on our experiments as we progress through the tasks.

Let’s explore some frequently encountered solutions to problems in context: patterns. The patterns we will cover here are iteration patterns, or cycle patterns: iterations or “cycles” in your chain of tasks. Take, for example, the chain that starts with you prompting a large language model (LLM) to obtain a completion, or “get an answer.” It’s important that we establish a cycle, evaluate the outcome, and iterate. I've structured the article as a progression toward more advanced strategies and patterns for completing tasks assigned to the LLM, increasing in complexity and sophistication.

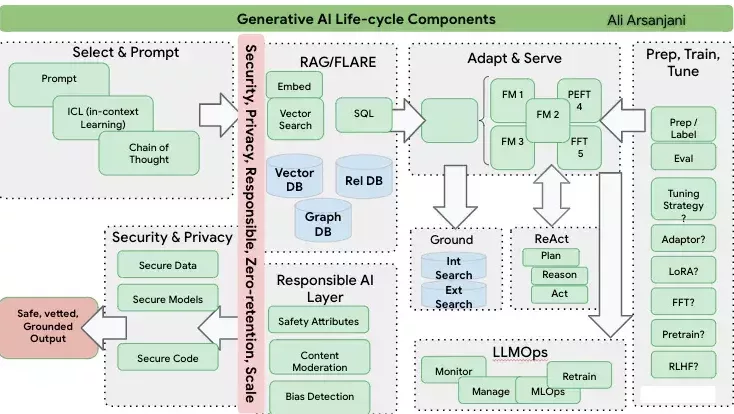

Below is a diagram that includes almost all the cycles and iterations we discuss in this article. Please use it as an indicative reference for the art of the possible and adapt to your specific enterprise needs.

These cycles collectively represent more sophisticated or more mature ways of handling the complexities of generative AI for the enterprise.

Each of the subsequent “cycles” builds on the basic pattern:

- prompt → FM → completion

expanded into:

- prompt → Tuned Model → Completion

- prompt → RAG | FLARE → Model → Completion

- prompt → Model → Completion → Grounding

- prompt → ToT → Model → ToT → Completion

For example, let’s start with a simple one:

Prompt (“Translate to French”) → Foundation Model (initial translation) → Adaptation (validation) → Completion (“Bonjour, comment ça va?”)

Maturity Level 1: Prompt, In-Context Learning, and Chaining

Prompt it and TICL it (Textual In-Context Learning)

Select a model, prompt it, get a response, assess the response, and re-prompt until your responses cumulatively give you what you want: a product description, a summary with a certain format, a SQL statement that runs, generated Python code, etc.

In-context learning (ICL) is a method of prompt engineering that allows language models to learn tasks from a few examples. In this method, a model is given a prompt with examples of a task in natural language. The model learns to solve the task without any change to its weights. ICL has become a new paradigm for NLP.

ICL shares the goal of few-shot learning: to let a model pick up a task from a handful of examples without extensive tuning. The difference from fine-tuning is that fine-tuning uses a supervised learning setup on a target dataset and updates the model's weights, whereas in ICL the model is simply prompted with a series of input–label pairs and its parameters stay unchanged.

Experience has shown that LLMs can perform quite an array of complex tasks through ICL, even as complex as solving mathematical reasoning problems [1].
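To make the idea of input–label pairs concrete, here is a minimal sketch of how a few-shot prompt might be assembled. The `build_few_shot_prompt` helper, the "Review:"/"Sentiment:" layout, and the example reviews are all assumptions made for illustration; no particular model or library is implied.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Assemble an in-context learning prompt from input-label pairs."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final item has no label: the model is expected to complete it.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical labeled examples; the model's weights are never touched.
examples = [
    ("The battery lasts all day.", "positive"),
    ("It stopped working after a week.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review.",
    examples,
    "Setup was quick and painless.",
)
print(prompt)
```

The resulting string is what you would send to whichever model you selected; iterating on the instruction and the examples is the "re-prompt" part of the cycle.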

Chain it:

Beyond the basic Prompt → FM → Adapt → Completion pattern, we typically need to extract data from somewhere, maybe run a predictive AI algorithm, and then send the results to a generative AI foundation model. This Chain of TAsks (CoTA, to be distinguished from Chain of Thought, CoT) pattern is exemplified as:

Chain: Extract Data/Analytics → Run a Predictive [set of] ML Model[s] → Send the Result to an LLM → Generate an Output

Example: Marketing Activation. Start by running a SQL statement against, say, BigQuery to get the segments of customers you wish to reach in a marketing campaign. Next, run a predictive AI ranking algorithm to get the top N customers in the segments, or the top set of micro-segments. Finally, send the data for the top segments or top customers to the LLM for a generative AI step that produces a personalized social media post or email for those customers, increasing the chances that they will respond to the outreach.
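The marketing activation chain can be sketched in plain Python as follows. Everything here is a stand-in chosen for illustration: `extract_segment` plays the role of the SQL query, `rank_top_n` plays the role of the predictive ranking model, and `fake_llm` replaces a real model call. The customer rows and scores are made up.

```python
from typing import Callable, Dict, List

Customer = Dict[str, object]


def extract_segment(rows: List[Customer], segment: str) -> List[Customer]:
    """Stand-in for the SQL step: keep customers in one segment."""
    return [r for r in rows if r["segment"] == segment]


def rank_top_n(rows: List[Customer], n: int) -> List[Customer]:
    """Stand-in for the predictive step: sort by a precomputed score."""
    return sorted(rows, key=lambda r: r["score"], reverse=True)[:n]


def build_prompt(customer: Customer) -> str:
    """Turn one selected customer into a generation prompt."""
    return (f"Write a short, friendly email to {customer['name']} "
            f"about our new {customer['segment']} offers.")


def run_chain(rows: List[Customer], segment: str, n: int,
              llm: Callable[[str], str]) -> List[str]:
    """Extract -> rank -> prompt -> generate, one completion per customer."""
    selected = rank_top_n(extract_segment(rows, segment), n)
    return [llm(build_prompt(c)) for c in selected]


def fake_llm(prompt: str) -> str:
    """Placeholder for a real model call."""
    return f"[completion for: {prompt}]"


rows = [
    {"name": "Ana", "segment": "outdoor", "score": 0.62},
    {"name": "Bea", "segment": "outdoor", "score": 0.91},
    {"name": "Cal", "segment": "home", "score": 0.88},
    {"name": "Dov", "segment": "outdoor", "score": 0.35},
]
emails = run_chain(rows, "outdoor", 2, fake_llm)
for email in emails:
    print(email)
```

Keeping each step a separate function makes it easy to evaluate and iterate on one link of the chain without disturbing the others.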

You can use a library like LangChain to accomplish many of these tasks in the chain. LangChain includes models, chains, and agents.

- Models. LangChain supports a variety of LLMs, including Google Vertex AI, OpenAI, and Hugging Face models.

- Chains. Chains are sequences of operations that LangChain can perform on text or other data. Chains can be used to perform tasks such as text analysis, summarization, and translation.

- Agents. Agents are programs that use LLMs to make decisions and take actions. Agents can be used to build applications such as chatbots and code analysis tools.

LangChain also provides integrations with other tools and APIs as well as end-to-end chains of tasks needed to complete a workflow. For example:

- Integrations with other tools: LangChain can be integrated with other tools, such as Google Search and Python REPL, to extend its capabilities.

- End-to-end chains for common applications: LangChain provides pre-built chains for common applications, such as document analysis and summarization.

LangChain agents are particularly powerful because they can use LLMs to make decisions and take actions in a dynamic and data-driven way. For example, a LangChain agent could be used to build a chatbot that decides at each turn which tool to call, based on the conversation so far and the results of its earlier actions.

LangChain can be used for a variety of use-cases. For example:

- Document analysis and summarization: LangChain can be used to analyze and summarize documents, such as legal documents or scientific papers.

- Chatbots: LangChain can be used to build chatbots that can interact with users in a natural and informative way.

- Code analysis: LangChain can be used to analyze code and identify potential bugs or security vulnerabilities.

Overall, LangChain is a powerful framework that can be used to build a wide variety of applications using LLMs. It is particularly well-suited for building dynamic and data-responsive applications.

LangChain agents use an LLM to decide what actions to take and the order in which to take them. They make each subsequent decision by observing the outcome of prior actions, which lets an agent adjust its plan as a task unfolds. This makes agents particularly well-suited for tasks that require reasoning, planning, and decision-making, such as customer service assistants.

Maturity Level 2. The above section is a very typical set of patterns used in an iterative cycle to leverage generative AI. Now let’s explore a more mature level that augments the above.

Tune it:

As you evaluate the model's response, you may find it inadequate even after putting substantial effort into prompt engineering and in-context learning. Here you may need to tune the foundation model: adapt it to a domain, an industry, a type of output format, a certain brevity as opposed to rambling outputs (e.g., classifying a set of symptoms).

Parameter-efficient fine-tuning (PEFT) is a family of techniques for fine-tuning LLMs that is less computationally expensive than traditional fine-tuning. PEFT works by training only a small number of parameters, either a subset of the model's own or a small set of newly added ones, while the rest of the model stays frozen. Two common techniques are adapter tuning and LoRA (Low-Rank Adaptation of Large Language Models).

Adapter tuning involves inserting small new layers into the LLM that are specific to the task at hand. Only these new layers are trained, on a small dataset of labeled examples, while the original weights stay frozen. This allows the LLM to learn the specific features of the task without having to fine-tune all its parameters.

LoRA freezes the pretrained weights and learns a low-rank update to them. For a weight matrix W, it trains two much smaller matrices, B and A, whose product BA is added to W; this is a form of matrix factorization applied to the update rather than to W itself. Only B and A are fine-tuned on a small dataset of labeled examples. This allows the LLM to learn the specific features of the task without having to fine-tune all its parameters.
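The low-rank idea behind LoRA can be illustrated with a few lines of plain Python. This is a toy sketch, not a training loop: the dimensions, the random initialization, and the helper names (`matmul`, `add`, `effective_weight`) are assumptions for illustration, and scaling factors used in practice are omitted.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


d_out, d_in, rank = 8, 8, 2
rng = random.Random(0)

# Frozen pretrained weight matrix W (d_out x d_in); never updated.
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]

# Trainable low-rank factors. B starts at zero and A starts random,
# so the initial update B @ A is zero and the model is unchanged.
B = [[0.0] * rank for _ in range(d_out)]
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]


def effective_weight(W, B, A):
    """Weight used in the forward pass: W plus the low-rank update B @ A."""
    return add(W, matmul(B, A))


full_params = d_out * d_in                # what full fine-tuning would train
lora_params = d_out * rank + rank * d_in  # what this sketch would train
print(f"full fine-tuning: {full_params} parameters")
print(f"low-rank update:  {lora_params} parameters")
```

The saving is modest at this toy size, but it grows quickly: for a 4096 × 4096 matrix and rank 8, the trainable count drops from about 16.8 million to 65,536.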

Full fine-tuning is the traditional approach to fine-tuning LLMs. In full fine-tuning, all the LLM’s parameters are fine-tuned on a large dataset of labeled examples. This can be computationally expensive, but it can lead to the best performance on the target task.

This allows for the introduction of domain-specific LLMs, which is very important. For example, see how Vertex AI can do this for you at a nominal cost.

Reinforcement Learning from Human Feedback (RLHF) can be used to further enhance the fine-tuning. More on that in Part 2.

Maturity Level 3. Now let’s retrieve data before we send the prompt and contextualize the input even more, decreasing the likelihood of hallucination by the LLM.

RAG it:

This approach allows you to access similar documents using semantic search. How is this done? You supply a set of documents, which are then chunked (read “split”) sentence by sentence, paragraph by paragraph, or page by page. The chunks are then converted into embeddings using a vector embedding model such as textembedding-gecko@latest and stored in a vector database such as Google’s Vertex AI Vector Search. At query time, the chunks most similar to the prompt are retrieved via Approximate Nearest Neighbor (ANN) search over the embeddings, which is what makes the search semantic. Adding this retrieved context to the prompt may significantly decrease the possibility of the model hallucinating and gives the model enough relevant context to be more knowledgeable about the topic and return more “sensible” and relevant completions. This process is known as Retrieval Augmented Generation, or RAG. So, RAG it.

RAG works by:

- Creating an initial prompt from the user’s query or statement.

- Augmenting the prompt with context retrieved from the vector store.

- Sending the augmented prompt to the LLM.
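The retrieve-then-augment steps can be sketched without any external service. Note the assumptions: the `embed` function below is a toy bag-of-words count standing in for a learned embedding model, the search is exact and brute-force rather than ANN, and the document chunks are invented for the example.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a real system uses a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the query (exact search)."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def augment(query: str, context: List[str]) -> str:
    """Build the augmented prompt that would be sent to the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


chunks = [
    "Refunds are issued within 14 days of the return being received.",
    "Our headquarters are located in Toronto.",
    "Returns must include the original receipt and packaging.",
]
query = "How many days until refunds are issued?"
top = retrieve(query, chunks, k=1)
augmented_prompt = augment(query, top)
print(augmented_prompt)
```

In production the `embed` and `retrieve` functions would be replaced by calls to an embedding model and a vector database; the shape of the cycle stays the same.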

Ground it:

Use an expanded search capability to increase factual grounding by allowing, or requesting, the model to return a reference to where it found the responses it just gave. RAG does provide a form of grounding, but prior to submission to the LLM. Grounding in the sense used here occurs after the model issues the output tokens; it involves finding a citation and sending it back. Many vendors, such as Google Cloud AI, provide multiple ways of doing factual grounding.
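As a rough illustration of grounding after generation, the sketch below attaches a citation to each sentence of a completion when a source supports it. The word-overlap score, the 0.5 threshold, and the source names are assumptions made for the example; vendor grounding services use far more robust matching.

```python
import re
from typing import Dict, List, Optional, Tuple


def tokens(text: str) -> set:
    """Lowercased word set used for a crude overlap check."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def cite(sentence: str, sources: Dict[str, str],
         min_overlap: float = 0.5) -> Optional[str]:
    """Return the id of the source that best supports the sentence, if any."""
    words = tokens(sentence)
    best_id, best_score = None, 0.0
    for source_id, text in sources.items():
        score = len(words & tokens(text)) / len(words) if words else 0.0
        if score > best_score:
            best_id, best_score = source_id, score
    return best_id if best_score >= min_overlap else None


def ground(completion: str,
           sources: Dict[str, str]) -> List[Tuple[str, Optional[str]]]:
    """Pair each sentence of the completion with a citation or None."""
    sentences = [s.strip()
                 for s in re.split(r"(?<=[.!?])\s+", completion) if s.strip()]
    return [(s, cite(s, sources)) for s in sentences]


# Hypothetical sources and model output.
sources = {
    "policy.md": "Refunds are issued within 14 days of the return being received.",
    "faq.md": "Support is available by chat on weekdays.",
}
completion = "Refunds are issued within 14 days. Shipping is always free."
grounded = ground(completion, sources)
for sentence, source in grounded:
    print(f"{sentence} [{source or 'no supporting source found'}]")
```

Sentences that come back without a citation are candidates for removal, a warning to the user, or another pass through the cycle.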

Note: Factual Grounding vs RAG

Factual grounding and RAG are both approaches to improving the accuracy and relevance of LLMs. However, they have different goals and use different techniques. Factual grounding is the process of ensuring that an LLM’s generated text is consistent with factual knowledge. This can be done by providing the LLM with access to a knowledge base of factual statements, and by training the LLM to generate text that is consistent with these statements.

RAG is a framework for augmenting LLMs with access to external knowledge bases. This allows LLMs to generate more accurate and informative text, even on complex and challenging tasks. RAG works by first retrieving relevant passages from the knowledge base. The LLM then uses these passages to generate its response.

The main difference between factual grounding and RAG is that factual grounding focuses on ensuring that the LLM’s generated text is consistent with factual knowledge, while RAG focuses on generating more accurate and informative text. Also, factual grounding typically uses a knowledge base of factual statements, while RAG can use any type of external knowledge base, including text documents, code repositories, and databases.

The two also differ in timing. RAG is applied before the prompt reaches the LLM, while factual grounding as described above is applied after the LLM has produced its output, as a check against sources. Factual grounding therefore tends to act as a general-purpose safeguard across a variety of tasks, while RAG is usually set up to improve the accuracy and relevance of LLMs on specific tasks or document collections.

FLARE it:

Forward-looking Active Retrieval Augmented Generation. FLARE is a variation of RAG in which you proactively decide when and what to retrieve. It uses a prediction of the upcoming sentence to anticipate future content and uses it as the query to retrieve relevant documents. Retrieval is triggered when the predicted sentence contains low-confidence tokens; the sentence is then regenerated using the retrieved documents.
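The decision of when to retrieve can be sketched as follows. The token probabilities, the 0.5 threshold, and the `fake_retrieve` and `fake_regenerate` stubs are assumptions for illustration; a real implementation would read token probabilities from the model and call a real retriever.

```python
from typing import Callable, List, Tuple

TokenProb = Tuple[str, float]  # (token, model confidence in that token)


def needs_retrieval(tentative: List[TokenProb], threshold: float) -> bool:
    """Retrieve only if some token in the predicted sentence is low-confidence."""
    return any(p < threshold for _, p in tentative)


def make_query(tentative: List[TokenProb], threshold: float) -> str:
    """Use the confident tokens of the predicted sentence as the search query."""
    return " ".join(tok for tok, p in tentative if p >= threshold)


def flare_step(tentative: List[TokenProb], threshold: float,
               retrieve: Callable[[str], List[str]],
               regenerate: Callable[[List[str]], str]) -> str:
    """Keep a confident sentence as is; otherwise retrieve and regenerate."""
    if not needs_retrieval(tentative, threshold):
        return " ".join(tok for tok, _ in tentative)
    docs = retrieve(make_query(tentative, threshold))
    return regenerate(docs)


seen_queries: List[str] = []


def fake_retrieve(query: str) -> List[str]:
    """Stub retriever that records the query it was given."""
    seen_queries.append(query)
    return ["<retrieved passage>"]


def fake_regenerate(docs: List[str]) -> str:
    """Stub for asking the model to rewrite the sentence with context."""
    return f"<sentence regenerated with {len(docs)} passage(s)>"


# The model is unsure about the year, so retrieval is triggered.
uncertain = [("The", 0.98), ("bridge", 0.95), ("opened", 0.90),
             ("in", 0.97), ("1931", 0.32)]
result = flare_step(uncertain, 0.5, fake_retrieve, fake_regenerate)
print(seen_queries, result)
```

Dropping the low-confidence tokens from the query keeps a possibly wrong guess from steering the search.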

Maturity Level 4. We are getting into a very interesting domain here, where you can start to ask your LLM how it is reasoning and what steps it takes to accomplish its task.

CoT it or ToT it. GoT it?

Use a prompt to derive the Chain/Tree/Graph of Thought output as a set of steps. Each step can use RAG actively to pull from documents in a vector database. ToT maintains a tree of thoughts, where thoughts represent coherent language sequences that are the reflection of how the LLM is “thinking” about the set of intermediate steps that it would use to solve your problem. This approach enables an LLM to self-evaluate the progress of intermediate steps/thoughts it makes toward solving a problem through a deliberate reasoning process.

The Tree of Thoughts (ToT) framework is a newer approach to LLM reasoning. It’s different from the Chain of Thought (CoT) approach, which guides language models along a single path: in a CoT diagram, each sentence is a direct continuation of the previous one, forming a linear chain. In a ToT diagram, the main idea branches off into several related ideas.

In a ToT diagram, each node is a “thought.” A thought is a coherent chunk of text that represents an intermediate reasoning step. This allows the language model to explore multiple reasoning paths and evaluate the progress of different thoughts toward solving the problem.

Tree of Thoughts allows multi-step analysis, multiple comparisons, and a wider set of options after each step. It also allows the system to backtrack to the first or an earlier step to consider new options.
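A toy search shows how keeping several thoughts alive differs from following a single chain. In this sketch, `propose` and `score` are stand-ins for LLM calls that would generate and self-evaluate candidate thoughts; the number puzzle (reach a target using "+3" and "*2") and the beam width are assumptions chosen so the example runs on its own.

```python
from typing import List, Tuple

State = Tuple[int, Tuple[str, ...]]  # (current value, steps taken so far)


def propose(state: State) -> List[State]:
    """Stand-in for asking the LLM for candidate next thoughts."""
    value, steps = state
    return [(value + 3, steps + ("+3",)), (value * 2, steps + ("*2",))]


def score(state: State, target: int) -> float:
    """Stand-in for LLM self-evaluation: closer to the target is better."""
    return -abs(target - state[0])


def tree_of_thoughts(start: int, target: int, depth: int, beam: int) -> State:
    """Breadth-first search that keeps the best `beam` thoughts per level."""
    frontier: List[State] = [(start, ())]
    for _ in range(depth):
        candidates = [child for s in frontier for child in propose(s)]
        candidates.sort(key=lambda s: score(s, target), reverse=True)
        frontier = candidates[:beam]
        if frontier[0][0] == target:
            break
    return frontier[0]


# With three thoughts kept per level, the search finds an exact solution.
best = tree_of_thoughts(start=1, target=14, depth=4, beam=3)
# With one thought kept per level it behaves like a single greedy chain.
greedy = tree_of_thoughts(start=1, target=14, depth=4, beam=1)
print(best, greedy)
```

The greedy run commits early to a branch that looks best locally and never reaches the target, which is the limitation of a single chain that the tree is meant to address.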

OK, you get the idea for the Graph of Thought. GoT it?

Graph of Thought (GoT) is a framework that models the reasoning process of large language models (LLMs) as a graph. In a Chain of Thought, each sentence is a direct continuation of the previous one, forming a linear chain. In a Tree of Thought, the main idea branches off into several related ideas.

GoT allows for dynamic data flow without a fixed sequence. This flexibility is important in AI, where data can come from multiple sources and may need to be processed non-linearly.

GoT models each thought generated by an LLM as a node within a graph. Dependencies are represented by edges that connect these nodes. This allows prompting the LLM to solve problems through networked, non-linear reasoning.

This strategy can be considered to be a generalization of Chain of Thoughts and Tree of Thoughts. Additionally, it offers more flexibility, such as refining a single thought and aggregating multiple thoughts.
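Refining a single thought and aggregating several thoughts can be sketched with a small graph structure. The `ThoughtGraph` class and the sorting task below are illustrative assumptions: in a real setting, each `refine` and `aggregate` call would be carried out by prompting the LLM rather than by a Python function.

```python
import heapq
from typing import Callable, Dict, List, Sequence


class ThoughtGraph:
    """Thoughts are nodes; `parents` records the dependency edges."""

    def __init__(self) -> None:
        self.values: Dict[str, List[int]] = {}
        self.parents: Dict[str, List[str]] = {}

    def add(self, name: str, value: List[int],
            parents: Sequence[str] = ()) -> None:
        self.values[name] = value
        self.parents[name] = list(parents)

    def refine(self, name: str,
               fn: Callable[[List[int]], List[int]]) -> None:
        """Refinement: improve an existing thought in place."""
        self.values[name] = fn(self.values[name])

    def aggregate(self, name: str, parents: Sequence[str],
                  fn: Callable[[List[List[int]]], List[int]]) -> None:
        """Aggregation: combine several thoughts into one node with many parents."""
        self.add(name, fn([self.values[p] for p in parents]), parents)


g = ThoughtGraph()
g.add("input", [7, 2, 9, 4, 1, 8])
# Branch into two sub-problems, as a tree would.
g.add("left", g.values["input"][:3], parents=["input"])
g.add("right", g.values["input"][3:], parents=["input"])
g.refine("left", sorted)
g.refine("right", sorted)
# Merge the branches: a node with two parents, which a tree cannot express.
g.aggregate("merged", ["left", "right"],
            lambda parts: list(heapq.merge(*parts)))
print(g.values["merged"], g.parents["merged"])
```

The `merged` node is what distinguishes a graph from a tree here: results from separate branches flow back together instead of being compared and discarded.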

Chain it.

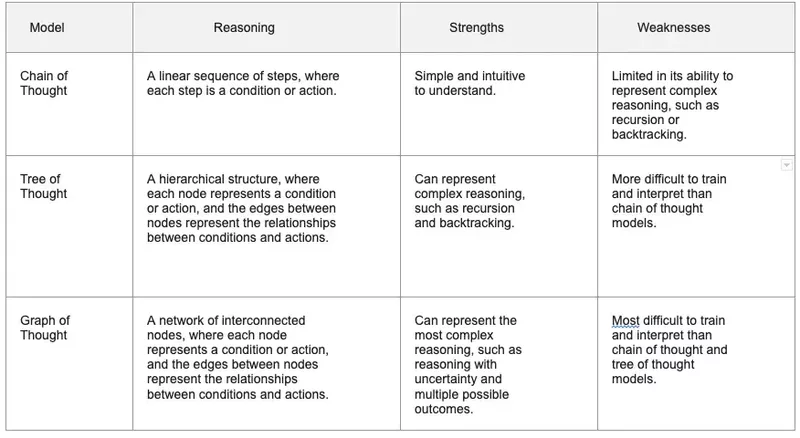

Let’s explain this table.

The Chain of Thought model stands out for its simplicity, intuitiveness, and ease of training. It follows a linear, step-by-step process that is good for tasks naturally aligned with sequential logic. This linearity, however, limits the model’s ability to handle complex reasoning tasks that require considering multiple variables or alternative options and outcomes. Once it sets “its mind” on a particular ‘chain,’ the model may find it challenging to backtrack or explore other avenues, which may lead to less-than-optimal outcomes.

The Tree of Thought model is characterized by its ability to represent complex reasoning in a hierarchical manner. This structure enables it to tackle multi-faceted problems by branching out into sub-problems or conditions. Its structured approach also makes it relatively easier to interpret than more complex models. This capability comes at the cost of higher computational needs and a risk of overfitting. In addition, heavy branching can make it harder to trace the model’s exact reasoning path, which works against its interpretability.

The Graph of Thought model stands out for its ability to handle high-complexity tasks involving multiple interconnected variables. Its flexibility allows it to model non-linear and interconnected relationships, making it highly suitable for real-world problems with complex, interrelated variables. However, this complexity demands significant computational resources and sophisticated algorithms for effective training. The Graph of Thought model is also the most challenging to interpret; its non-linear interconnected structure doesn’t lend itself to straightforward explanations, making it difficult to understand the reasoning behind its decisions and use it for explainability.

ReAct — Plan it:

RAG gets data and passes it to the LLM so that it doesn’t look stupid and make things up when it doesn’t know your domain. ReAct goes a step further: it lets the LLM itself make an external call, via an API for example, to retrieve information while it is reasoning about what to tell you in response to your prompt. ReAct is a method that combines acting and reasoning to help LLMs “learn” new tasks and make decisions. In using this technique or strategy, we prompt LLMs to generate verbal reasoning traces and actions for a task. This allows the model to perform dynamic reasoning to create, maintain, and adjust high-level plans for acting.

ReAct is designed for tasks in which the LLM is allowed to perform certain actions. For example, an LLM may be able to interact with external APIs to retrieve information. It addresses issues that LLMs sometimes face, like producing incorrect facts and compounding errors.
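The interleaving of reasoning traces, actions, and observations can be sketched as a simple loop. This is a minimal illustration under stated assumptions: `scripted_llm` returns canned replies in place of a real model, `lookup_order` is a hypothetical tool standing in for an external API, and the "Thought / Action / Observation / Final Answer" text format is one common convention, not a fixed standard.

```python
import re
from typing import Callable, Dict


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: replies based on what it has observed."""
    if "Observation:" not in transcript:
        return "Thought: I need the order status.\nAction: lookup_order[A-17]"
    return "Thought: I have what I need.\nFinal Answer: Order A-17 has shipped."


def lookup_order(order_id: str) -> str:
    """Hypothetical tool; a real one would call an external API."""
    return {"A-17": "shipped"}.get(order_id, "unknown")


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_order": lookup_order}


def react(question: str, llm: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Alternate model replies and tool observations until an answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += "\n" + reply
        final = re.search(r"Final Answer:\s*(.*)", reply)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\[(.*?)\]", reply)
        if action is None:
            break
        name, arg = action.groups()
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name}"
        # The observation is fed back so the next reply can reason over it.
        transcript += f"\nObservation: {observation}"
    return "No answer within the step budget."


answer = react("Where is order A-17?", scripted_llm, TOOLS)
print(answer)
```

The `max_steps` budget is a practical safeguard: it keeps a model that never produces a final answer from looping indefinitely.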

Conclusion

This article reviewed a set of patterns, and combinations of techniques, that are commonly encountered as you progress through the journey of deriving business value from generative AI. These patterns aim to make generative AI enterprise-ready and, conversely, help you mature the enterprise from a prototype to a product with generative AI.

This article was originally published on Medium.

Topics and References

I have covered or alluded to the following topics in this article.

- [Textual] In-Context Learning (TICL). Okay, I made the [textual] word up to rhyme and make it a semantic verb aligned with the rest. guilty :-) !

- Few-shot learning

- Supervised learning

- Retrieval Augmented Generation (RAG)

- FLARE, a form of Active RAG.

- Factual grounding

- Chain of Thought (CoT)

- Tree of Thoughts (ToT)

- Graph of Thought (GoT)

- ReAct: Reasoning & Action.

Here are some specific references that you may find helpful.

[1] LLMs can perform complex tasks through ICL, even as complex as solving mathematical reasoning problems:

This paper shows that LLMs can be prompted to perform complex tasks with only a few examples, using a technique called chain of thought prompting. The authors demonstrate that LLMs can be used to solve mathematical reasoning problems, translate languages, and perform other complex tasks with high accuracy.

This survey paper provides a comprehensive overview of ICL for LLMs. The authors discuss the different ways in which ICL can be used to train LLMs to perform new tasks, and they provide examples of ICL being used to solve complex tasks such as mathematical reasoning and code generation.

This paper introduces the chain of thought prompting technique for getting LLMs to perform complex tasks. The authors demonstrate that LLMs prompted with a chain of thought can solve mathematical reasoning problems, even when the problems are presented in a new format. They explore how generating a chain of thought, a series of intermediate reasoning steps, significantly improves the ability of large language models to perform complex reasoning. In particular, they show how such reasoning capabilities are an emergent behavior that surfaces “naturally in sufficiently large language models, where a few chain of thought demonstrations are provided as exemplars in prompting. Experiments on three large language models show that chain of thought prompting improves performance on a range of arithmetic, commonsense, and symbolic reasoning tasks.”

[2] (Textual) In-Context Learning (TICL)

- Brown, Tom B., et al. “Language models are few-shot learners.” arXiv preprint arXiv:2005.14165 (2020).

- Raffel, Colin, et al. “Exploring the limits of transfer learning with a unified text-to-text transformer.” arXiv preprint arXiv:1910.10683 (2019).

[3] Few-shot learning

Prototypical networks are a type of prototype classifier that is used for few-shot learning. Few-shot learning is a classification technique that uses a small dataset to adapt to a specific task. Prototypical networks are based on the idea that each class can be represented by the mean of its examples in a representation space learned by a neural network.

- Snell, Jake, Kevin Swersky, and Richard Zemel. “Prototypical networks for few-shot learning.” arXiv preprint arXiv:1703.05175 (2017).

The authors propose a model-agnostic algorithm for meta-learning: it is compatible with any model trained with gradient descent and applicable to a variety of different learning problems, including classification, regression, and reinforcement learning.

The goal of meta-learning is to train a model on a variety of tasks so that it can quickly learn new tasks with only a few training examples. In this approach, the model parameters are trained to be easy to fine-tune with a small number of gradient steps on a new task.

They demonstrate that their approach achieves state-of-the-art results on two few-shot image classification benchmarks, performs well on few-shot regression, and accelerates fine-tuning for policy gradient reinforcement learning with neural network policies.

- Finn, Chelsea, et al. “Model-agnostic meta-learning for fast adaptation of deep networks.”

[4] Supervised learning.

- Murphy, Kevin P. Machine learning: a probabilistic perspective. MIT press, 2012.

- Hastie, Trevor, Robert Tibshirani, and Jerome Friedman. The elements of statistical learning: data mining, inference, and prediction. Springer Science & Business Media, 2009.

[5] Retrieval Augmented Generation (RAG)

The paper on retrieval augmented generation (RAG) was written by Patrick Lewis, et al. RAG is a framework for augmenting large language models (LLMs) with access to external knowledge bases. This allows LLMs to generate more accurate and informative text, even on complex and challenging tasks.

RAG has been shown to be effective for a variety of tasks, including question-answering, summarization, and translation. It is a promising new approach to generative AI, and it has the potential to revolutionize the way we interact with computers.

- Lewis, Patrick, et al. “Retrieval-augmented generation for knowledge-intensive NLP tasks.” arXiv preprint arXiv:2005.11401 (2020).

[6] Factual grounding

- Peng, Baolin, et al. “Check Your Facts and Try Again: Improving Large Language Models with External Knowledge and Automated Feedback.” This paper proposes a method for improving the factual accuracy of LLMs by providing them with feedback on their generated text. The feedback is based on a knowledge base of factual statements.

- Wang, Xuezhi, et al. “Chain of thought prompting elicits reasoning in large language models.”

[7] Chain of Thought (CoT)

The paper explores how generating a chain of thought — a series of intermediate reasoning steps — significantly improves the ability of large language models to perform complex reasoning.

The authors propose the chain-of-thought prompting technique, which enables large language models to accurately perform complex reasoning tasks using only a few intermediate reasoning steps without explicit training. Key insights and lessons learned from the paper are that large language models can reason at a comparable level to models that have been extensively trained and that the chain-of-thought prompting technique is a simpler and more scalable mechanism for encoding reasoning steps into language models.

- Wei, Jason, et al. “Chain of thought prompting elicits reasoning in large language models.”

[8] Tree of Thoughts (ToT)

- Shunyu Yao, et al. “Tree of Thoughts: Deliberate Problem Solving with Large Language Models.” arXiv preprint arXiv:2305.10601 (2023).

- Jieyi Long. “Large Language Model Guided Tree-of-Thought.”

[9] Graph of Thought (GoT)

[10] ReAct: Reasoning and Action

- Denny Zhou et al. “Least-to-Most Prompting Enables Complex Reasoning in Large Language Models.”

- Shunyu Yao, et al. “ReAct: Synergizing Reasoning and Acting in Language Models.” Also, See this official blog.

[11] Applications of chains of tasks.