Europe’s GDPR. California’s CCPA. The AI Act. The Blueprint for an AI Bill of Rights. New York City’s Local Law 144. The Algorithmic Accountability Act. The American Data Privacy and Protection Act. The Algorithmic Justice and Online Platform Transparency Act. The Protecting Americans from Dangerous Algorithms Act. The Age-Appropriate Design Code. The Kids Online Safety Act…

Safe to say, AI legislation is no longer something that is coming. It is here.

But what does this mean in practice for enterprise analytics organizations and the leaders trying to make sense of this landscape? How best to react? How to get in front of regulations – and emerging AI technologies? How to develop a fully integrated framework that is driven by company values and fosters a holistic analytics governance program?

To help our community in this rapidly changing space, IIA recently held a webinar on “2023 AI Legislation and Ethics Best Practices.” Led by Carole Piovesan, managing partner at INQ Law, and Onyinyechi Daniel, VP of data and analytics strategy and partnership at Highmark Health, the session provided an update on the latest legislative trends and strategies for getting in front of upcoming requirements.

In conjunction with the webinar, we conducted a flash survey to understand current feelings and practices on AI legislation and digital ethics. In this blog, we’ll touch on the current state of the regulatory environment, explore where organizations stand today, and make a few recommendations for taking the next step in your responsible analytics governance journey.

The Current Regulatory Environment

We are all familiar with the major privacy regulations that have been passed in recent years. From Europe’s GDPR (General Data Protection Regulation) to California’s CCPA (California Consumer Privacy Act), governments have stepped in to ensure that their citizens’ sensitive information is protected. These laws were enacted in response to the explosive growth in the use of data and technology over the past few decades.

As with privacy regulations, concerns about the appropriate use of AI continue to grow as AI technologies become more prevalent in business settings. And governments are responding in kind.

The first wave of legislation focused on the ethical use of AI has arrived. In the US alone, approximately 130 AI-related bills were passed or proposed in 2021, compared with a single bill passed just a few years prior.

Here are some of the major regulations and blueprints to be aware of (see “Related Resources” section at the end of this article to explore further):

- The European Union has recently drafted an AI Act. Though it is not yet law, it is expected to become one, and a vote could come in the coming weeks.

- The Biden administration published a Blueprint for an AI Bill of Rights. While this is not a law, its purpose is to prompt congressional action, and many elements of the Bill of Rights will likely appear in future legislation.

- New York City Local Law 144 makes it unlawful to use automated employment decision tools (AEDTs) unless certain bias audit and notice requirements are met, although enforcement has been delayed and is currently scheduled to begin in April.

- Industry groups have begun to publish standards to help guide organizations. For example, IEEE has created a Trusted Data and Artificial Intelligence Systems for Financial Services Playbook, and the OECD has an influential set of values-based AI principles.

Where Organizations Are Today: Flash Survey Results

In conjunction with the webinar mentioned above, IIA surveyed our community about their experience and concerns with AI regulations and digital ethics. Let’s take a look at the results.

First, some good news. A relatively small percentage of respondents – approximately 10% – indicated that their organization had been directly impacted by AI-related concerns or controversies in the past year.

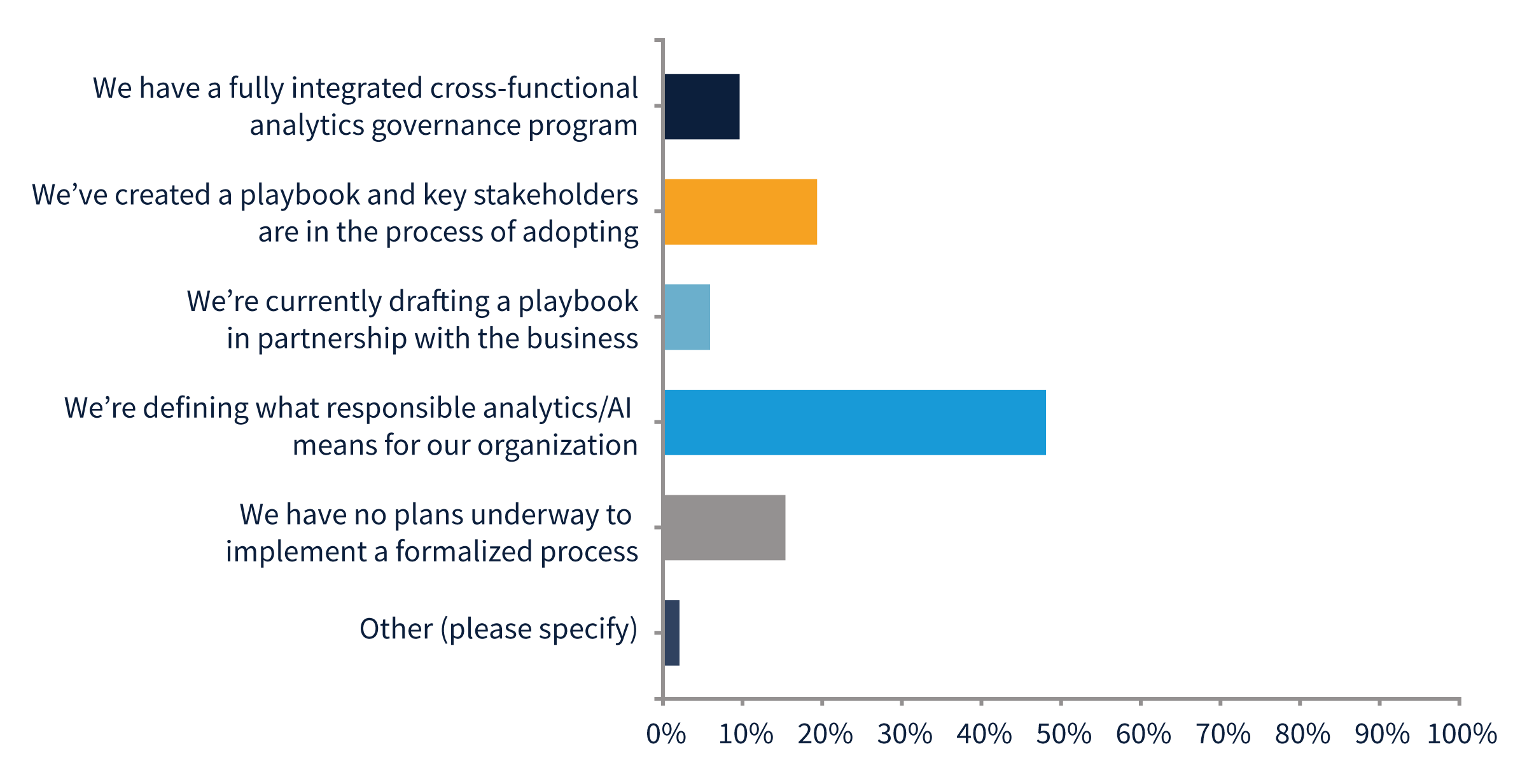

The majority of respondents indicated that their organization has done some work in this area.

- Approximately 15% of respondents indicated that there are no plans to create a formalized process for the responsible development and deployment of AI. While we would like to see a lower number here, it still signals that most organizations are prioritizing responsible analytics governance in some way.

- 55% stated that they are in the formation process. This could mean the early stages of defining a process or actively drafting a playbook.

- 20% have created a playbook and are working toward adoption.

- Finally, 10% are at the top of the class, with a fully integrated program.

Of those who have implemented a process or are in the process of doing so, we asked which ethical considerations they weigh when designing or implementing AI systems.

- The majority (>80%) indicated Privacy and Security.

- The majority (>70%) also indicated Bias and Discrimination.

- Less than 20% indicated Job Displacement.

- Some also mentioned explainability, transparency, accountability, and industry-specific regulations.

Of those who have implemented a process or are in the process of doing so, we asked how their organization ensures that AI systems appropriately account for bias.

- The most popular answer, coming in at just over 40%, was through “regular monitoring and testing.”

- The second most popular answer was “adhering to strict ethical standards” at just under 30%.

- Small percentages of respondents indicated “implementing fairness algorithms” and “independent review boards.”

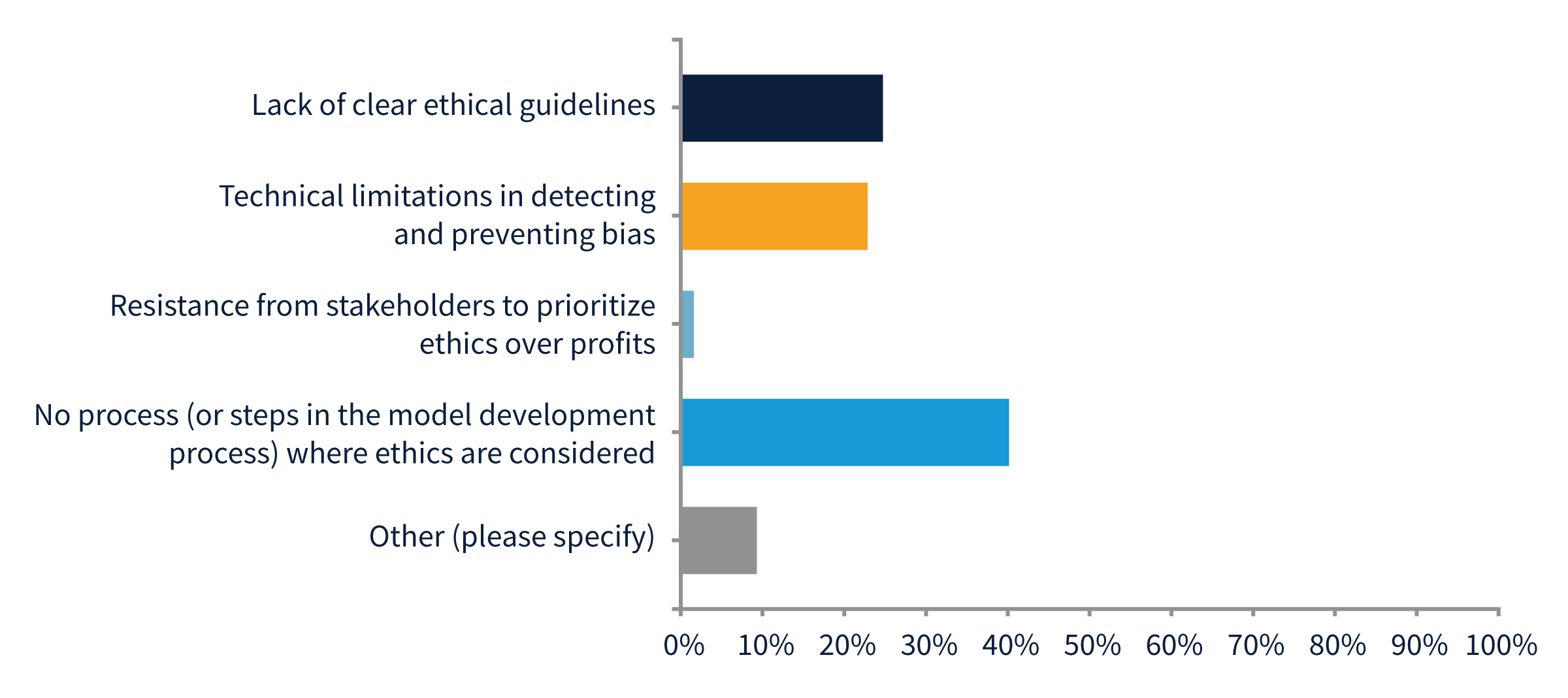
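To make “regular monitoring and testing” a little more concrete, here is a minimal Python sketch of one common check: comparing each group's rate of favorable outcomes against the best-performing group's rate (an "impact ratio"). This is an illustration under stated assumptions, not a method reported by the survey respondents or a compliance procedure. The function names, the sample data, and the 0.8 threshold (borrowed from the widely used "four-fifths" heuristic) are all hypothetical choices.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model produced a favorable outcome for that person.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no favorable outcomes; impact ratio is undefined")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the chosen threshold.

    The 0.8 default is an assumption for illustration only.
    """
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical monitoring data: 100 decisions per group.
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(rates, ratios, flagged)
```

Run on a schedule against recent model decisions, a check like this turns monitoring into a repeatable test. A flagged group is a prompt for human review, not proof of discrimination.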

We also asked about the biggest challenge in ensuring the responsible use of AI in data and analytics:

- 43% stated that there is no process (or step in the model development process) where ethics are considered.

- Just over 20% indicated a “lack of clear ethical guidelines.”

- Just over 20% also indicated “technical limitations in detecting and preventing bias.”

- Only a single respondent indicated they had run into resistance from stakeholders who prioritized results over ethics.

- Several lamented a lack of awareness among their business partners. In other words, this isn’t even on their radar.

Only approximately 20% said they were somewhat/very confident that the existing laws and regulations will be able to address AI-related harm and consequences.

65% said they are somewhat/very confident in their ability to address AI-related concerns at work.

Preparing for AI Regulations Today (and Tomorrow)

If you are looking for a place to start preparing for future legislation, here are a few ideas:

- Ensure that you have a process in place to check for ethical concerns.

  - Our survey indicated that lack of process was a major barrier. This is likely something that could be incorporated into the existing development process today.

  - Having a process will also ensure that those unaware of these concerns are brought into the discussion.

  - Use a “process by design” approach. Responsible analytics governance means truly integrating governance into everyday business processes.

  - Key questions to consider when integrating ethics into data frameworks and business models include:

    - Do you manage policies on data and algorithm ethics based on business needs, ethical principles, and socially good outcomes?

    - Do you assess and advise business initiatives and projects on their ethical data and algorithm lifecycle management, including acquisition, development, usage, and monitoring?

    - Are you able to execute audits of algorithmic processes and meet legislative demands at low cost?

    - Can you govern the lifecycle and procurement of analytics and AI models, and are you developing practices and tooling in a way that aligns with ethical principles?

- Build a coalition that believes this is an important topic.

  - In the webinar, Onyi emphasized how important it is to build a coalition in your organization that believes building an AI ethics program is the right thing to do. Be sure to cast a wide net in terms of stakeholders.

  - Think and act beyond regulatory requirements. In building this coalition, you’re taking proactive steps to protect your brand and your customers and to promote the values of your company.

  - Before going any further, articulate why your organization needs an analytics program in the first place and what aspirational goals it serves.

- Keep an eye on the major pieces of legislation.

  - This is a rapidly changing space. For example, the EU AI Act is meant for high-risk systems, and it is unclear whether that category covers ChatGPT. Microsoft is arguing that it does not.

  - Given how quickly this space evolves (and it always will), no analytics governance playbook will keep up with the technology and reactive regulations. Instead, define the digital ethical principles that are unique to your company and create a clear and flexible governance framework that covers people, process, and technology.
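As one way to picture the first recommendation (an ethics check built into the existing development process), here is a minimal Python sketch of a pre-release checkpoint. Everything in it is hypothetical: the `EthicsReview` fields, the `release_blockers` function, and the example model name are illustrative assumptions. The checks loosely mirror the considerations survey respondents named (privacy and security, bias, explainability, accountability). Your own principles should determine the actual list.

```python
from dataclasses import dataclass


@dataclass
class EthicsReview:
    """Hypothetical record of ethics checks for one model release."""
    model_name: str
    privacy_and_security_reviewed: bool = False
    bias_testing_completed: bool = False
    explainability_documented: bool = False
    accountable_owner: str = ""


def release_blockers(review):
    """Return the names of checks that have not yet been satisfied."""
    checks = {
        "privacy and security review": review.privacy_and_security_reviewed,
        "bias testing": review.bias_testing_completed,
        "explainability documentation": review.explainability_documented,
        "accountable owner": bool(review.accountable_owner.strip()),
    }
    return [name for name, passed in checks.items() if not passed]


# Hypothetical usage: a model with two of four checks complete.
review = EthicsReview(
    model_name="churn_model",
    privacy_and_security_reviewed=True,
    accountable_owner="analytics-governance",
)
blockers = release_blockers(review)
ready_for_release = not blockers
print(blockers, ready_for_release)
```

A checkpoint like this can sit alongside existing release steps such as code review or model validation, which also brings people who were unaware of these concerns into the discussion.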