In a results-focused environment, should we measure the performance of data science teams solely by their impact? The short answer is yes, but with some adjustments.

Why Measure Productivity?

Measuring productivity in data science teams is not trivial, but it is crucial for a data-driven company. The board needs visibility into the efficiency of its teams and, in the tech sector, of its IT team in particular. Leaders, in turn, need to measure productivity to allocate resources, evaluate team and project profitability, and even assess the individual performance of team members.

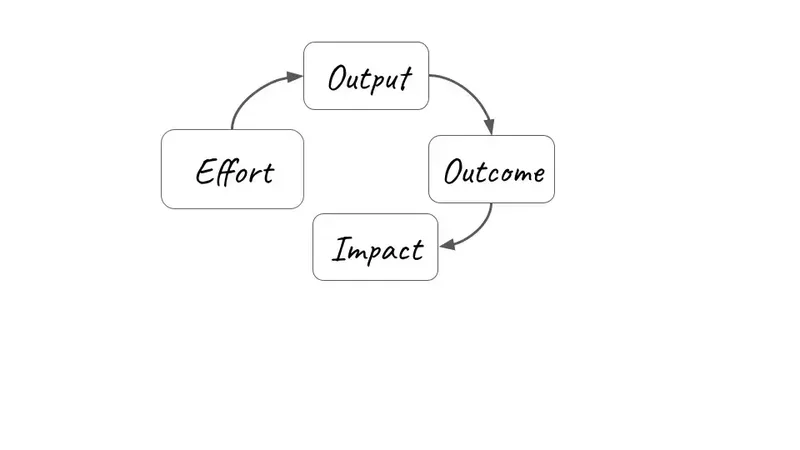

In this article, we share our experience leading these teams at Mercado Libre, showcasing the mental model (Effort → Output → Outcome → Impact) that has helped us maintain high-performing teams that generate value.

Directing a data science team in a dynamic giant such as Mercado Libre (Meli), in a highly competitive sector, poses significant challenges. The need is not limited to large players: even small companies, or those just starting to manage their data, need to measure the productivity of their data science work.

Yet, before tackling the productivity dilemma, it’s essential to place ourselves in the context of data science, machine learning, or artificial intelligence, whichever name you prefer. Although this field has seen significant growth in recent years, it’s still in its infancy when compared to more established sectors such as finance or construction. Since its inception in 1962, it has evolved rapidly, especially in recent months, driven in part by advances in artificial intelligence technologies such as generative AI.

Productivity in Data Science

Given the ongoing evolution and development of data science, a crucial challenge emerges: measuring productivity in this burgeoning field. Although data science might be one of the most innovative and rapidly evolving areas of research in the 21st century, it is still in the early stages of development. Thus, we find ourselves in a ceaseless process of evolution, looking up to our elder sibling, software engineering, as a reference for adopting best practices.

Measuring productivity in software development remains a challenge, despite it being a more established field. For instance, a project might have code that is correctly written and delivered on time, but if it fails to meet the end user’s needs, one could argue that it hasn’t been productive. Striking a balance between delivery speed and the quality of the outcome is a common obstacle. Even McKinsey & Company has only recently begun discussing this topic.

Compared to more traditional fields, productivity measurement in data science seems to be a multi-headed beast. One particular difficulty is evaluating the quality of data analysis. In data science, quality isn’t always directly proportional to the amount of time spent on the task, as a significant amount of time can be spent cleaning and preparing data before any meaningful analysis can be done. Therefore, measuring productivity requires a thorough understanding of the data science process and an appreciation of each step, even those that don’t produce direct results. Further, there is no single metric that can encompass its entire complexity, and if you look for literature on the subject, you’re likely to end up practically empty-handed.

Due to their highly collaborative and intricate nature, data science products require a comprehensive set of metrics encompassing technical aspects (such as AUC, precision, F1-Score), business-related factors (like churn, revenue), and even development measures (quality, speed, and maintainability). These metrics are instrumental in gauging product effectiveness at different levels. Hence, it is crucial to grasp the multifaceted nature of this field to lead our teams effectively in this fast-paced and competitive environment.

Mental Model

Successfully navigating the turbulent waters of data science requires, for large players and smaller-scale companies alike, a high level of adaptability in the organization’s strategy. In the dynamic environment of Mercado Libre, our strategy is driven by a quarterly, results-oriented approach, an experience that proves valuable for both giants and startups. This approach implies careful management and prioritization of projects. We share those lessons here from our experience at Meli, and we firmly believe they are transferable to any scale of operation.

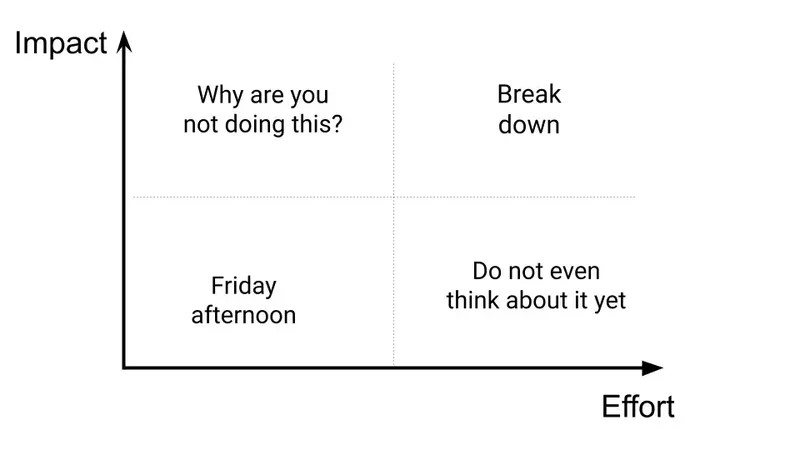

In this scenario, the initial strategy was to prioritize projects based solely on potential impact. It seemed a logical choice at the time — we selected projects based on estimated benefit and adjusted the scope according to available resources. However, it wasn’t long before we realized that this strategy was not as effective as we had hoped. Projects turned out to have less impact than expected, with benefits often being overestimated or misaligned at the time of implementation. Additionally, the required effort for some other projects was underestimated.

This journey led us to rethink our strategy. After several iterations, we devised a mental model that would help us tackle these ubiquitous challenges and provide us with a reliable framework. We drew inspiration from an existing model in the world of software engineering, tailoring it to the requirements of the fintech data and analytics team at Meli and, indeed, the entire data science discipline. This model succinctly encapsulates four stages: Effort, Output, Outcome, and Impact.

It soon became clear that the success of data science projects relies not only on the team of developers but also on the implementation team. Just as in a football match, where victory demands the collaborative effort of defenders, midfielders, and forwards, the success of a data science project hinges on a cohesive team striving toward a shared goal.

Prioritizing Analytics Framework

Analytics resources are scarce and the demands on those resources are ever increasing, so it’s critical to have a clear, transparent, and intentional method to source and execute the analytics projects that will secure business value, and, as a result, meet or exceed the expectations of business leaders.

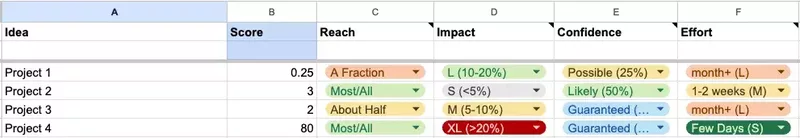

RICE Framework

Another advantage of this mental model is its synergy with the RICE (Reach, Impact, Confidence, Effort) method. This strategic framework doesn’t just offer a powerful tool for prioritizing projects; it also enhances our approach of linking impact to effort, and adds the concept of confidence. Here, confidence refers to our degree of certainty about whether we can successfully execute the proposed solution. For instance, a project with a clear and agreed-upon implementation path naturally instills a higher degree of confidence than a project with external dependencies that could hinder its execution. Furthermore, the RICE method considers reach, which is the scale of the expected benefit of the solution, whether it’s localized to a particular country or region, targeted at a specific segment, or intended to cater to a broad user base.

Employing the mental model and the RICE framework also paves the way for the creation of a data-based index (DBI). This index enables us to measure and rank projects based on their alignment with corporate goals, resource efficiency, and confidence in implementation. Consequently, we can compare projects objectively: those with higher DBI scores are typically more likely to succeed.

DBI = (Reach * Impact * Confidence) / Effort

The DBI offers a transparent blueprint for decision-making, primarily focusing on project prioritization and execution. It not only enhances our ability to deliver impactful results, but also optimizes the use of resources. By favoring projects with a better return on effort, it also promotes profitability.
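
As a rough illustration of the formula above, here is a minimal Python sketch of the score for a single project. The function name `dbi_score`, the units (reach as a fraction of users, impact on a 1 to 10 scale, confidence between 0 and 1, effort in person-months), and the sample values are all assumptions made for this example, not figures from our projects.

```python
def dbi_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """Return (Reach * Impact * Confidence) / Effort.

    Assumed units (hypothetical, chosen for this sketch):
    reach in [0, 1], impact on a 1-10 scale, confidence in [0, 1],
    effort in person-months.
    """
    if effort <= 0:
        raise ValueError("effort must be positive")
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return (reach * impact * confidence) / effort


# Hypothetical project: reaches 40% of users, impact 8 out of 10,
# 80% confidence, two data scientists for three months (6 person-months).
score = dbi_score(reach=0.40, impact=8, confidence=0.80, effort=6)
print(round(score, 3))  # 0.427
```

Because confidence multiplies the numerator, halving it halves the score: the same project with 40% confidence would score about 0.213. The absolute value means little on its own; it is only useful for comparing projects scored on the same scales.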

Step-by-Step Implementation

Step 1: Understand the Context and Set Objectives

Tasks to perform: Clearly identify the pain points, opportunities, or problems you want to solve. Fall in love with the problem, not the solution.

Definition of objectives: Set clear goals for your team or project, aligned with the organization’s objectives.

Step 2: Mental Model (Effort → Output → Outcome → Impact)

Effort: Assess the resources and time needed for each project.

Output: Define what deliverables or products are expected from the project.

Outcome: Consider how these deliverables will affect the broader objectives of the company. (Validate with stakeholders.)

Impact: Measure the long-term effect of the project on the organization. (Validate with stakeholders.)

Step 3: Implementation of the RICE Framework

Reach: Evaluate how many people or what segment of your target population will be affected by the project.

- Example: It reaches 40% of our users.

Impact: Estimate the degree of change or benefit the project will bring. You can do this quantitatively or qualitatively (high, medium, or low impact).

- Example: The project will generate a benefit of US$ 5M in 4 months (quantitative)

- Example: Scale of 1–10: 1 → Very low; 10 → Very high (qualitative)

Confidence: Consider your level of certainty about the project’s viability and results.

- Example: Evaluate the readiness of the implementation team to use the tool. Do they have an implementation plan? Are there specific dates for putting the model to use? Ask: if we had the model today, what immediate actions could we take? Are there dependencies that must be resolved before the model can be used?

Effort: Calculate the amount of work and resources needed.

- Example: Two data scientists will be required for three months. You can also measure the effort qualitatively: High, Medium, Low.

Use the RICE formula to rank and prioritize projects.

(Reach * Impact * Confidence) / Effort
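
To make the ranking step concrete, the sketch below scores a small backlog and sorts it. It is only an illustration: the project names and values are invented, and the numeric mapping for qualitative effort (`EFFORT_SCALE`) is an assumption you would need to agree on with your own team.

```python
from dataclasses import dataclass

# Hypothetical mapping from qualitative effort labels to numbers.
EFFORT_SCALE = {"low": 1, "medium": 2, "high": 4}


@dataclass(frozen=True)
class Project:
    name: str
    reach: float       # fraction of users affected, 0-1
    impact: int        # qualitative scale, 1 (very low) to 10 (very high)
    confidence: float  # certainty about viability and results, 0-1
    effort: str        # "low", "medium", or "high"


def rice_score(project: Project) -> float:
    """(Reach * Impact * Confidence) / Effort, with effort mapped to a number."""
    effort = EFFORT_SCALE[project.effort]
    return (project.reach * project.impact * project.confidence) / effort


def rank(projects: list[Project]) -> list[Project]:
    """Return projects ordered from highest to lowest RICE score."""
    return sorted(projects, key=rice_score, reverse=True)


# Invented backlog, for illustration only.
backlog = [
    Project("lead scoring", reach=0.10, impact=9, confidence=0.5, effort="high"),
    Project("churn model", reach=0.40, impact=7, confidence=0.8, effort="medium"),
    Project("dashboard refresh", reach=0.25, impact=3, confidence=0.9, effort="low"),
]

for project in rank(backlog):
    print(f"{project.name}: {rice_score(project):.3f}")
```

With these invented values, the low-effort dashboard outranks the high-impact but uncertain, expensive lead-scoring project. That is the kind of trade-off the formula is meant to surface for discussion rather than settle automatically.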

Step 4: Productivity Measurement and Analysis

Analysis of each step: Evaluate each phase of the data science project lifecycle, including data preparation, analysis, modeling, production, monitoring, etc.

Implementation of appropriate metrics: Choose relevant technical and business metrics to measure success, such as AUC and F1-Score on the technical side, and churn and revenue on the business side.
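
For readers less familiar with the technical metrics named above, here is a minimal sketch of how precision, recall, and F1-Score are derived from binary predictions. The labels are made up for the example, and in practice you would more likely rely on an established library such as scikit-learn than on hand-written code.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return precision, recall, f1


# Made-up labels: 3 true positives, 1 false positive, 1 false negative.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)  # 0.75 0.75 0.75
```

A technical metric like this says how well the model predicts, not whether the business benefited, which is why it should be tracked alongside a business metric such as churn or revenue.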

Step 5: Adaptability and Project Management

Agile Management: Adapt management strategies to be agile and respond to changes quickly. Adopt an incremental development mindset, always starting with a minimum viable product (MVP) and iterating along the way. You should have a clear definition of “done” (the finished product). For example: the MVP is considered complete when the model’s accuracy reaches 74%.

Flexible prioritization: Adjust project priorities according to the changing context and the results obtained. Prioritize “quick wins.”
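
One way to keep a definition of “done” unambiguous is to write it down as an explicit check. The sketch below uses the 74% accuracy target from the example in this step; the function names and the sample labels are hypothetical.

```python
MVP_ACCURACY_TARGET = 0.74  # threshold taken from the example in this step


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def mvp_is_done(y_true, y_pred, target=MVP_ACCURACY_TARGET):
    """Return True when accuracy on the evaluation set reaches the target."""
    return accuracy(y_true, y_pred) >= target


# Made-up evaluation labels: 6 of 8 predictions are correct (75%).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

print(accuracy(y_true, y_pred))     # 0.75
print(mvp_is_done(y_true, y_pred))  # True
```

Agreeing on the metric, the threshold, and the evaluation set before work starts makes it harder for the scope of the MVP to drift.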

Step 6: Continuous Learning and Improvement

Constant iteration: Use results and feedback to continuously improve processes and strategies.

Learning and development: Foster an environment of constant learning within the team. Share the results with other developers to receive feedback.

Step 7: Practical Application and Case Studies

Study real cases: Analyze previous projects such as the lead generation project example to understand the challenges and solutions applied.

Apply in real projects: Implement these strategies in an ongoing project to observe their effectiveness.

Step 8: Evaluation and Adjustment

Regular review: Regularly evaluate project progress to ensure it is aligned with the objectives. Use the OKR (objectives and key results) format.

Adjustments based on results: Make changes in the strategy or execution based on the results obtained and lessons learned.

As we have seen, successful leadership in data science extends far beyond technical skills; it demands well-defined strategies and meticulous execution. We suggest following the actionable steps presented above to empower your team, and applying this model and the RICE framework not only to enhance your team’s productivity and growth but also to ensure their work is in line with your organization’s goals. Tailor these steps to your needs to navigate through obstacles and guide your team toward a prosperous and successful future.

Conclusion: An Effective and Adaptable Approach for Data Science Teams

In short, steering a prolific data science team to success transcends conventional gauges of effort and impact. Our experience at Mercado Libre has taught us the importance of considering more nuanced factors. This includes aligning our objectives with those of the organization, using resources efficiently, and striking a balance between the team’s effort and the results achieved. Nevertheless, the relevance of these lessons extends beyond our own experience.

The conceptual model we advocate — Effort → Output → Outcome → Impact — is far more than a simple theory. It was born out of experience and has proven effective in practice.

This model has rapidly evolved into an essential tool in our work, aligning the expectations of all stakeholders, from the developers themselves to the end-users. Pairing this model with the RICE framework led to a drastic shift in how we prioritize projects. By adopting an approach that emphasizes efficacy and efficiency rather than generic productivity metrics, we have been able to position data science as a source of innovation and success in today’s competitive tech landscape.

Applying this methodology has substantially boosted our performance: it has strengthened the productivity of our teams, facilitated data-driven decision-making, and improved project execution. The strategies and tactics we have devised are versatile and adaptable, which makes them suitable for any organization working with data science teams, regardless of size or scope. The approach has been validated in various situations, from the prioritization of machine learning models to the construction of ETLs or dashboards. It has proven equally effective in leading teams to success in diverse environments, ranging from tech giants to emerging startups.

To crystallize the power of this framework, we leave you with a real-world example:

In a lead generation project for a business vertical, our main objective was to increase our active user base by employing precise prospect selection to optimize telesales and boost the conversion rate. However, we ran into an unexpected problem: the success metric itself was hard to measure. We relied on one or more call centers, which involved a manual process prone to errors, leading to over a month’s delay in obtaining results. This situation hampered our ability to iterate over our lead bases and accurately assess the project’s performance, thus hindering the identification of improvement opportunities.

Despite extending the project beyond what was planned, the outcome did not fully meet our expectations. Ultimately, we chose to analyze customers acquired through the channel in question during the lead generation period, and measured the volume of transactions generated in their first few months. This approach allowed us to attribute the impact directly to the model.

This experience highlights the importance of not only correctly choosing the metric that defines the success of the project, but also ensuring it can be measured with ease. Furthermore, it underscores the need to consider proper implementation preparation and impact measurement. The choice of the metric should resonate with the context, be it a business-oriented metric like revenue or churn rate, or a product-specific metric such as conversion rate or activation. Contemplating these aspects is crucial when prioritizing data science projects.
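
The fallback measurement described in this example (transaction volume in the first months for customers acquired through the channel during the campaign period) can be sketched as follows. The record layout, field names, channel label, and the 90-day window are all assumptions made for illustration; the sketch only computes the cohort volume and does not by itself establish that the model caused it.

```python
from datetime import date


def first_months_volume(customers, transactions, channel, start, end, window_days=90):
    """Sum transaction amounts made within `window_days` of acquisition,
    for customers acquired through `channel` between `start` and `end`."""
    # Cohort: customer id -> acquisition date, filtered by channel and period.
    cohort = {
        c["id"]: c["acquired_on"]
        for c in customers
        if c["channel"] == channel and start <= c["acquired_on"] <= end
    }
    total = 0.0
    for t in transactions:
        acquired_on = cohort.get(t["customer_id"])
        if acquired_on is None:
            continue  # customer is not part of the cohort
        if 0 <= (t["date"] - acquired_on).days < window_days:
            total += t["amount"]
    return total


# Invented records, for illustration only.
customers = [
    {"id": 1, "channel": "telesales", "acquired_on": date(2023, 1, 10)},
    {"id": 2, "channel": "organic", "acquired_on": date(2023, 1, 15)},
    {"id": 3, "channel": "telesales", "acquired_on": date(2023, 5, 1)},
]
transactions = [
    {"customer_id": 1, "date": date(2023, 2, 1), "amount": 100.0},  # counted
    {"customer_id": 1, "date": date(2023, 6, 1), "amount": 50.0},   # outside window
    {"customer_id": 2, "date": date(2023, 1, 20), "amount": 70.0},  # other channel
    {"customer_id": 3, "date": date(2023, 5, 2), "amount": 30.0},   # outside period
]

volume = first_months_volume(
    customers, transactions, "telesales", date(2023, 1, 1), date(2023, 3, 31)
)
print(volume)  # 100.0
```

A metric of this kind can be computed from data the team already owns, which avoids the month-long manual loop through the call centers.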

References

McKinsey & Company. "Yes, You Can Measure Software Developer Productivity." Technology, Media & Telecommunications Insights. Last modified August 17, 2023. https://www.mckinsey.com/indus....

Orosz, Gergely, and Kent Beck. "Measuring Developer Productivity." The Pragmatic Engineer. Last modified August 29, 2023. https://newsletter.pragmaticen....

Originally published in Mercado Libre Tech.