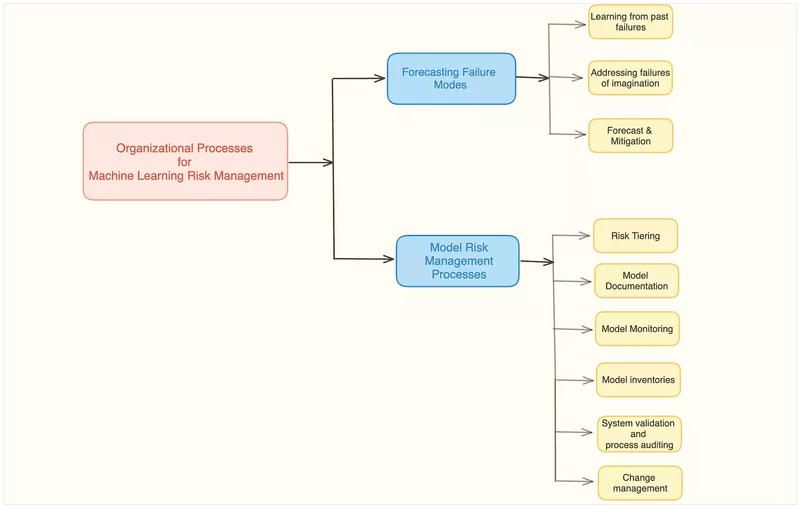

Organizational processes are a key nontechnical determinant of reliability in ML systems.

In our ongoing series on machine learning risk management, we've embarked on a journey to unravel the critical elements that ensure the trustworthiness of machine learning (ML) systems. In our first installment, we delved into “Cultural Competencies for Machine Learning Risk Management,” exploring the human dimensions required to navigate this intricate domain. The insights presented therein lay the foundation for our current exploration and I highly recommend that you go through part 1 before continuing with this article.

In this second article, we turn to another vital element of ML systems: organizational processes. Technical details often overshadow these processes, yet they are key to the safety and performance of machine learning models. Just as we recognized the significance of cultural competencies, we now acknowledge that organizational processes are the foundation on which the reliability of ML systems is built.

This article discusses the role of organizational processes in machine learning risk management. Throughout, we emphasize that practitioners should carefully consider, document, and proactively address any known or foreseeable failure modes in their ML systems.

1. Forecasting Failure Modes

While it is crucial to identify and address possible problems in ML systems, turning this idea into action takes time and effort. In recent years, however, there has been a significant increase in resources that help ML system designers anticipate issues more systematically. Cataloging potential problems carefully makes it easier to build ML systems that are more robust and safer in real-world settings. In this context, the following strategies can be explored:

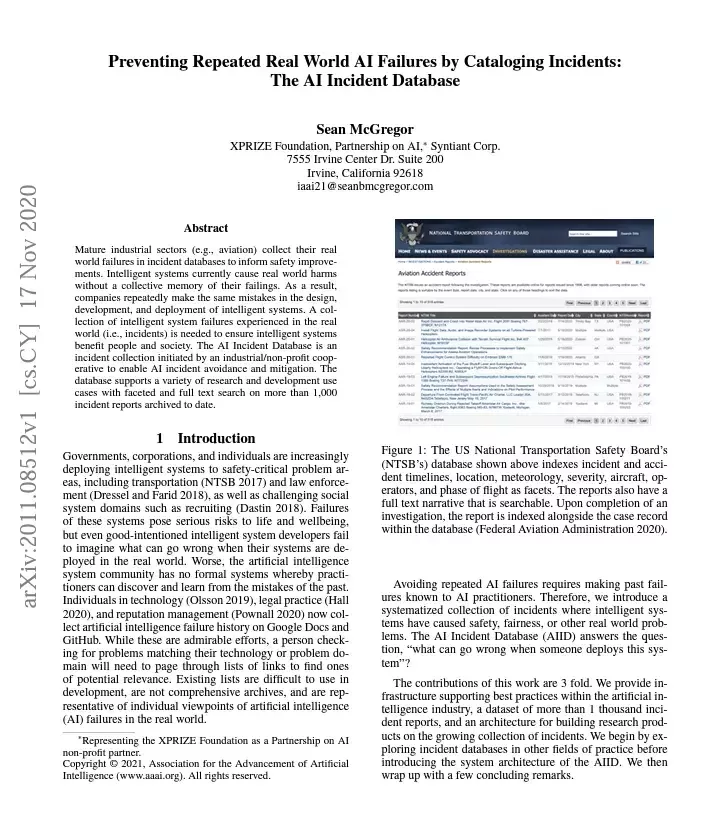

Learning from past failures

Much like transportation professionals who investigate and catalog incidents to prevent future occurrences, ML researchers and organizations have started collecting and analyzing AI incidents. The AI Incident Database, which we also brought up in the last article, is a prominent repository that allows users to search for incidents and glean valuable insights. When developing an ML system, consulting this resource is crucial.

If a similar approach has caused an incident in the past, it serves as a strong warning sign that the new system may also pose risks, necessitating careful consideration.

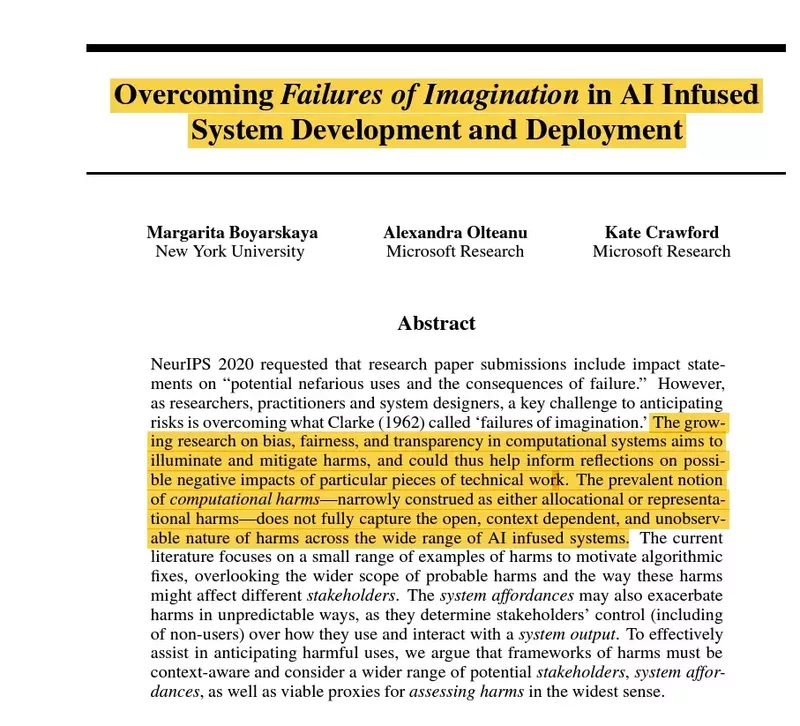

Addressing failures of imagination

Often, AI incidents stem from unforeseen or poorly understood contexts and details of ML systems' operations. The structured approaches outlined in the paper "Overcoming Failures of Imagination in AI-Infused System Development and Deployment" offer ways to hypothesize about these hard-to-anticipate risks. They prompt teams to consider "who" could be affected (including investors, customers, and vulnerable nonusers), "what" could be affected (covering well-being, opportunities, and dignity), "when" harm could occur (including immediate, frequent, and long-term scenarios), and "how" it could occur (involving actions and belief alterations).

While AI incidents can be embarrassing, costly, or even illegal for organizations, foresight can mitigate many known incidents, potentially leading to system improvements. In such cases, the temporary delay in implementation is far less costly than the potential harm to the organization and the public from a flawed system release.

2. Model Risk Management Processes

Model risk management (MRM), applied here to machine learning, is a comprehensive framework and set of procedures for identifying, assessing, mitigating, and monitoring the risks of developing, deploying, and operating ML systems. These procedures form a significant part of the governance structure, particularly under the U.S. Federal Reserve's Supervisory Guidance on Model Risk Management (SR 11-7), which applies to predictive models used in substantial consumer finance applications.

Documentation is where compliance becomes tangible, holding practitioners accountable and guiding them to build robust models.

While complete MRM implementation may be more attainable for larger organizations, smaller ones can also extract valuable insights. This section dissects MRM procedures into smaller, more manageable components, making them easier to understand and use.

1. Risk Tiering

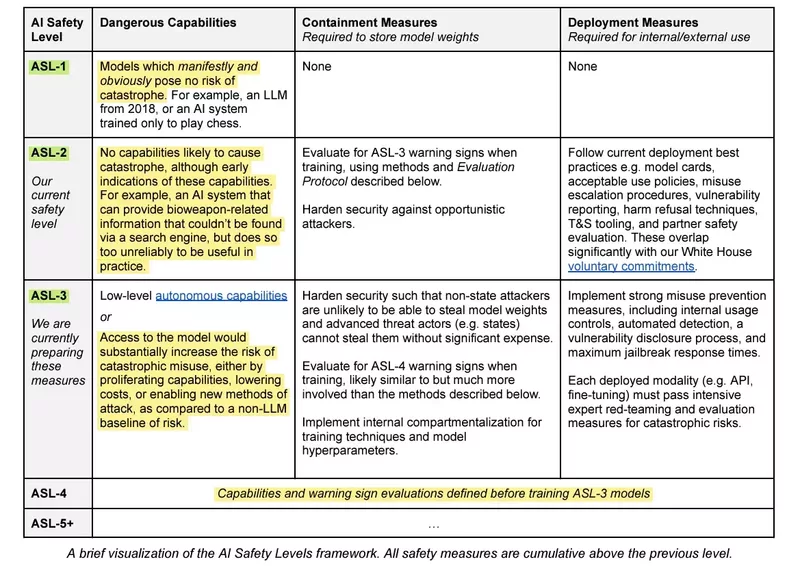

When evaluating risk in ML system deployment, a common approach is to estimate materiality from the likelihood of harm and the anticipated loss if that harm occurs. High-materiality applications demand greater attention, and effective governance allocates resources accordingly across high-, medium-, and low-risk systems. For example, Anthropic's recently released Responsible Scaling Policy introduces the concept of AI Safety Levels (ASL), loosely inspired by the U.S. government's biosafety level (BSL) standards for managing hazardous biological materials. Here is a snapshot of the same:

2. Model Documentation

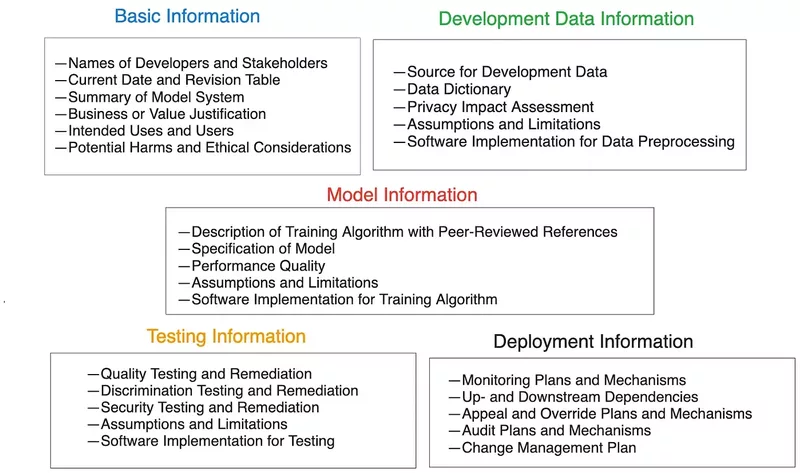

MRM standards require comprehensive system documentation, fulfilling multiple purposes, including stakeholder accountability, ongoing maintenance, and incident response. Standardized documentation across systems streamlines audit and review processes, making it a critical aspect of compliance. Documentation templates guide data scientists and engineers throughout the model development process, ensuring that all necessary steps are conducted to construct a reliable model. Incomplete documentation indicates inadequacies in the training process, as most templates require practitioners to fill in every section. Furthermore, including one's name and contact information in the final model document fosters accountability.

Extensive model documentation can be daunting. Smaller organizations can opt for simpler frameworks, such as datasheets and model cards, to achieve these objectives.
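To make the idea of a documentation template concrete, here is a minimal sketch of a completeness check. The section names, the `ModelDocument` class, and the example model are all hypothetical illustrations; they are not taken from SR 11-7, datasheets, or any model card standard, and a real template would define its own required fields.

```python
from dataclasses import dataclass, field

# Hypothetical required sections; a real template will define its own.
REQUIRED_SECTIONS = (
    "intended_use",
    "training_data",
    "evaluation_results",
    "known_limitations",
    "owner_name",
    "owner_contact",
)


@dataclass
class ModelDocument:
    """A lightweight, model-card-style record for one ML system."""
    model_name: str
    sections: dict = field(default_factory=dict)

    def missing_sections(self):
        """Return required sections that are absent or left blank."""
        return [
            name
            for name in REQUIRED_SECTIONS
            if not str(self.sections.get(name, "")).strip()
        ]

    def is_complete(self):
        """A document is complete only when no required section is missing."""
        return not self.missing_sections()


# Illustrative document with two sections omitted and one left blank.
doc = ModelDocument(
    model_name="credit_default_v2",
    sections={
        "intended_use": "Rank loan applications by default risk.",
        "training_data": "Internal loan book, 2018-2022.",
        "owner_name": "A. Analyst",
        "owner_contact": "",
    },
)

print(doc.missing_sections())
print(doc.is_complete())
```

A check like this could run before a model is submitted for review, so that gaps in the document surface early rather than during an audit.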

3. Model Monitoring

ML safety starts from the recognition that ML system behavior in real-world contexts is inherently unpredictable, so these systems must be monitored continuously from deployment until decommissioning. A principal concern is input drift, which arises when real-world conditions diverge from the static training data because of market fluctuations, regulatory changes, or unexpected events such as pandemics. This divergence can threaten the system's functionality.

Well-run ML systems reduce these risks by carefully monitoring for changes in data and model quality. This monitoring goes beyond performance alone; it also looks for unusual data or predictions, security problems, and fairness issues. Together, these checks support thorough risk management in a changing operational setting.
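One way to check a single numeric feature for input drift is the population stability index (PSI), which compares how live data falls into bins derived from the training data. The sketch below is an illustration under assumptions, not a method prescribed by this article: the function name, the choice of ten quantile bins, and the synthetic data are all hypothetical. A commonly quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but these thresholds are conventions rather than guarantees.

```python
import math
import random
from bisect import bisect_right


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """Compare two samples of one numeric feature using PSI.

    Bins are cut at quantiles of `expected` (the training sample), so each
    bin holds roughly the same share of training data.
    """
    ordered = sorted(expected)
    # Interior cut points at training-data quantiles (n_bins - 1 of them).
    cuts = [ordered[(len(ordered) * i) // n_bins] for i in range(1, n_bins)]

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            # Values beyond the training range land in the outermost bins.
            counts[bisect_right(cuts, v)] += 1
        # Floor at eps so empty bins do not produce log(0) or division by 0.
        return [max(c / len(values), eps) for c in counts]

    exp_frac = bin_fractions(expected)
    act_frac = bin_fractions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_frac, act_frac))


# Synthetic illustration: one live sample matches training, one is shifted.
rng = random.Random(0)
train = [rng.gauss(0, 1) for _ in range(5000)]
live_same = [rng.gauss(0, 1) for _ in range(5000)]
live_shifted = [rng.gauss(1, 1) for _ in range(5000)]

psi_same = population_stability_index(train, live_same)
psi_shifted = population_stability_index(train, live_shifted)
print(f"PSI, same distribution:    {psi_same:.3f}")
print(f"PSI, shifted distribution: {psi_shifted:.3f}")
```

In practice a check like this would run on a schedule for each important input and for the model's predictions, alongside the security and fairness checks mentioned above.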

4. Model Inventories

At the heart of MRM is the model inventory: a comprehensive database that lists all of an organization's ML systems and links each one to essential resources such as monitoring strategies, audit results, system maintenance records, and incident response plans.

Any organization that is deploying ML should be able to answer straightforward questions like:

For any organization serious about leveraging ML, maintaining a robust model inventory isn't just a good practice — it's a necessity.
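As a rough sketch of what a model inventory could look like in code, the example below stores one record per ML system with links to the resources a model inventory typically connects to. Everything here is hypothetical: the `InventoryEntry` fields, the system names, the file paths, and the two example queries are illustrations, and a production inventory would normally live in a database rather than an in-memory list.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InventoryEntry:
    """One ML system and pointers to its governance resources."""
    system_name: str
    owner: str
    status: str  # hypothetical values: "deployed" or "retired"
    monitoring_plan: Optional[str] = None
    latest_audit: Optional[str] = None
    maintenance_log: Optional[str] = None
    incident_response_plan: Optional[str] = None


# Illustrative entries; names and paths are made up.
inventory = [
    InventoryEntry(
        "fraud_scoring", "risk-team", "deployed",
        monitoring_plan="docs/fraud/monitoring.md",
        latest_audit="docs/fraud/audit.md",
        incident_response_plan="docs/fraud/incident-plan.md",
    ),
    InventoryEntry(
        "churn_model", "growth-team", "deployed",
        monitoring_plan="docs/churn/monitoring.md",
    ),
    InventoryEntry("legacy_pricing", "pricing-team", "retired"),
]


def deployed_systems(entries):
    """Return every entry that is currently deployed."""
    return [e for e in entries if e.status == "deployed"]


def missing_incident_plan(entries):
    """Return names of deployed systems with no incident response plan."""
    return [
        e.system_name
        for e in deployed_systems(entries)
        if e.incident_response_plan is None
    ]


print(len(deployed_systems(inventory)))
print(missing_incident_plan(inventory))
```

Even a simple structure like this makes gaps visible, for example a deployed system that has a monitoring plan but no incident response plan.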

5. System Validation and Process Auditing

An essential aspect of traditional MRM practice is that an ML system undergoes two primary reviews before it is released. First, experts test its technical components, finding and fixing issues. Next, a separate team checks that the system, including its design and future plans, follows all applicable rules and guidelines. Systems are reviewed again when they receive major updates. Some smaller companies may feel they can perform only some of these checks, but the basic idea is to have someone outside the design team test the system, confirm that it meets all rules, and approve it before any critical system goes into use.

Effective MRM in machine learning means dual scrutiny.

6. Change Management

ML systems, like other complex software, have various parts like backend code, APIs, and user interfaces. Changes in one component can affect others. Challenges like shifting data patterns, new privacy laws, and reliance on third-party software make managing these changes crucial. In the early stages of a critical ML system, planning for these changes is essential. Without this planning, mistakes, such as using data without permission or system mismatches, can occur and might go unnoticed until problems arise.
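One simple planning aid is a map of which components depend on which, so that the downstream impact of a proposed change can be listed before it ships. The sketch below is an assumption-laden illustration: the component names and the `DEPENDENTS` map are hypothetical, and a real system would derive this map from its own architecture.

```python
from collections import deque

# Hypothetical map: component -> components that directly depend on it.
DEPENDENTS = {
    "training_data": ["model"],
    "third_party_lib": ["model", "backend_code"],
    "model": ["scoring_api"],
    "backend_code": ["scoring_api"],
    "scoring_api": ["user_interface"],
    "user_interface": [],
}


def impacted_by(changed, dependents=DEPENDENTS):
    """Return every component downstream of `changed`, sorted by name.

    Uses a breadth-first walk so indirect dependents are included too.
    """
    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for downstream in dependents.get(current, []):
            if downstream not in seen:
                seen.add(downstream)
                queue.append(downstream)
    return sorted(seen)


print(impacted_by("third_party_lib"))
print(impacted_by("scoring_api"))
```

Listing the affected components this way gives reviewers a concrete checklist of what to retest when data sources, third-party software, or internal code change.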

Conclusion

From cultural competencies to organizational processes, we've explored various aspects that contribute to ensuring the safety and performance of machine learning systems. As we've seen, forecasting failure modes, meticulous model risk management, and vigilant monitoring are key pillars in this journey. Building robust models, maintaining comprehensive inventories, and embracing change management are crucial steps toward responsible AI. These foundational elements not only ensure the safety and performance of the ML systems but also the trust of their users and stakeholders.

This article was originally published in Towards Data Science.