An organization's culture is an essential aspect of responsible AI.

A Note on the Series: As we embark on this series, it’s important to provide context. I am one of the co-authors of Machine Learning for High-Risk Applications, along with Patrick Hall and James Curtis. This series is designed to offer a concise, reader-friendly companion to the book’s extensive content. In each article, we aim to distill the critical insights, concepts, and practical strategies presented in the book into easily digestible portions, making this knowledge accessible to a broader audience.

In the race for progress, we must tread carefully, for haste in engineering and data science can shatter more than just code.

Imagine a world where AI-powered systems could do no wrong, where they flawlessly executed their tasks without a glitch. Sounds like a sci-fi dream, doesn't it? Welcome to the real world of artificial intelligence (AI), where things don't always go as planned. An integral part of responsible AI practice involves preventing and addressing what we term “AI incidents.” This article discusses cultural competencies that can help prevent and mitigate AI incidents. Future articles will cover the related business processes to give a fuller picture of this topic.

Defining AI Incidents

We need to define AI incidents before delving into machine learning (ML) safety, because we can't effectively mitigate what we don't understand. AI incidents encompass any outcomes stemming from AI systems that could potentially cause harm. Their severity varies with the extent of the damage they cause. They range from relatively minor mishaps, such as a mall security robot tumbling down stairs, to catastrophic events, such as self-driving cars causing pedestrian fatalities or the large-scale diversion of healthcare resources away from those in dire need.

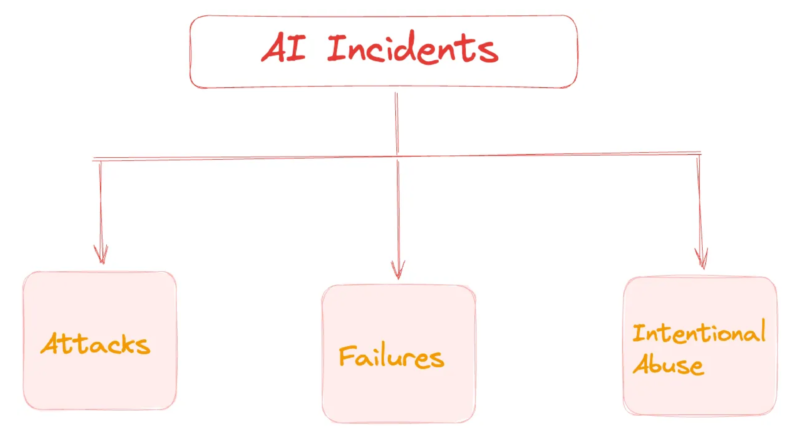

We can categorize AI incidents into three major groups:

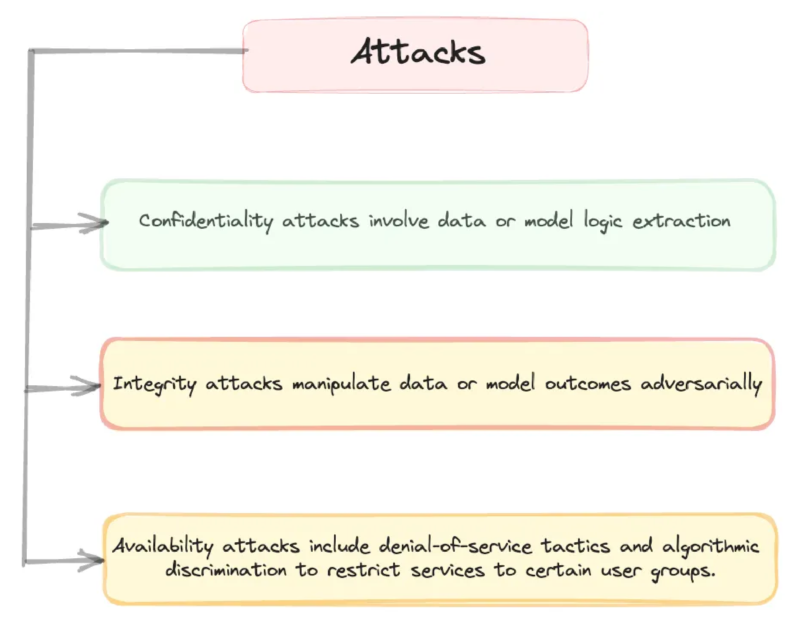

Attacks: Many parts of ML systems, such as software and prediction tools, are vulnerable to cyber and insider attacks. Once an attack succeeds, we lose control of the system, and the attackers may pursue their own goals for its accuracy, bias, privacy, reliability, and more. Researchers have extensively documented these attacks, which fall into three categories: confidentiality, integrity, and availability attacks.

Failures: Failures refer to issues within AI systems, which often include problems like algorithmic bias, lapses in safety and performance, breaches of data privacy, a lack of transparency, or shortcomings in third-party system components.

Abuses: People with malicious intent can abuse AI tools. Hackers often use AI to enhance their attacks, and AI has also been put to use in autonomous drone strikes. Additionally, some governments employ AI for purposes like ethnic profiling, which shows how far the misuse of AI technology can reach.

Cataloging AI Incidents: The AI Incident Database

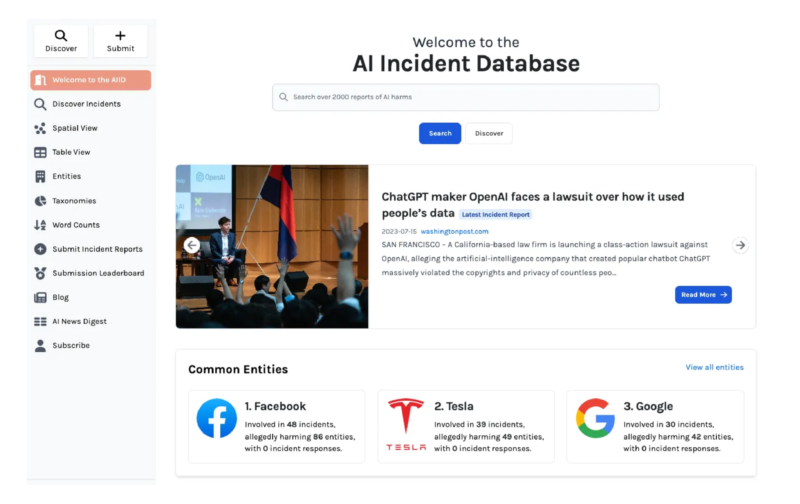

AI incidents can act as catalysts for responsible technological progress within companies. When developing ML systems, it's crucial to cross-reference current plans with past incidents so that similar incidents can be averted proactively. This is the primary aim of ongoing efforts to build AI incident databases and their associated publications. An excellent example of such an effort is the AI Incident Database.

As stated on the AI Incident Database website, their mission is clear:

"The AI Incident Database is dedicated to indexing the collective history of harms or near harms realized in the real world by the deployment of artificial intelligence systems. Like similar databases in aviation and computer security, the AI Incident Database aims to learn from experience so we can prevent or mitigate bad outcomes."

The underlying idea here is that, like other domains, AI can also greatly benefit from learning from past mistakes to avoid their recurrence in the future. To achieve this effectively, it is imperative to maintain an accurate record of these failures.
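To make the cross-referencing idea concrete, here is a minimal sketch of how a team might keep its own small incident log and check a planned system against it. Everything in it is an assumption for illustration: the `Incident` record, the tag vocabulary, and the `related_incidents` helper are hypothetical and are not the AI Incident Database's schema or API. The sample entries simply restate the examples mentioned earlier in this article.

```python
from dataclasses import dataclass
from enum import Enum


class IncidentType(Enum):
    """The three incident groups described in this article."""
    ATTACK = "attack"
    FAILURE = "failure"
    ABUSE = "abuse"


@dataclass(frozen=True)
class Incident:
    """Hypothetical record for a team-maintained incident log."""
    title: str
    incident_type: IncidentType
    tags: frozenset  # lowercase keywords describing the system involved


def related_incidents(plan_tags, incidents):
    """Return past incidents sharing at least one tag with the plan, most overlap first."""
    plan = {tag.lower() for tag in plan_tags}
    scored = [(len(plan & inc.tags), inc) for inc in incidents]
    hits = [(overlap, inc) for overlap, inc in scored if overlap > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [inc for _, inc in hits]


# Illustrative entries only, restating examples from the article.
PAST_INCIDENTS = [
    Incident("Security robot falls down stairs", IncidentType.FAILURE,
             frozenset({"robotics", "physical-safety"})),
    Incident("Self-driving car pedestrian fatality", IncidentType.FAILURE,
             frozenset({"autonomous-vehicles", "physical-safety", "computer-vision"})),
    Incident("Healthcare resources diverted from patients in need", IncidentType.FAILURE,
             frozenset({"healthcare", "algorithmic-bias"})),
]

# Tags describing a hypothetical planned system.
matches = related_incidents(["Healthcare", "algorithmic-bias", "tabular"], PAST_INCIDENTS)
for inc in matches:
    print(inc.incident_type.value, "-", inc.title)
```

Simple tag overlap is a deliberately crude matching rule. In practice the review itself, meaning someone reading the matched incidents and asking whether the current design repeats them, matters more than the lookup.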

Mitigating ML Risks Through Cultural Competencies

The culture within an organization plays a pivotal role in ensuring responsible AI practices. This article mainly explores cultural strategies for achieving this goal. Upcoming articles will cover additional methods for mitigating AI-related risks, including business processes and model risk management. Responsibility does not rest with developers alone: validation, auditing, and incident response teams are crucial too.

1. Organizational Accountability

Achieving responsible AI practices in organizations hinges on accountability, culture, and adherence to standards like Model Risk Management (MRM). Without consequences for ML system failures, attacks, or misuse, safety and performance may be overlooked. Key cultural tenets of MRM include written policies and procedures, rigorous assessment by independent experts, accountable leadership (e.g., a Chief Model Risk Officer), and incentives that reward responsible ML implementation, not just speedy development.

Small organizations can designate an individual or group that is held accountable when incidents occur and rewarded when systems work well. Collective accountability, by contrast, tends to mean that no one is responsible for ML risks and incidents.

It’s important to have an individual or group held accountable if ML systems cause incidents and rewarded if the systems work well. If an organization assumes that everyone is accountable for ML risk and AI incidents, the reality is that no one is accountable.

— Machine Learning for High-Risk Applications, Chapter 1.

2. Culture of Effective Change

A strong culture of effective change involves actively questioning and scrutinizing the various steps involved in developing ML systems. In a broader organizational context, promoting a culture of serious inquiry into ML system design is essential. Such a culture increases the likelihood of developing successful ML systems and products while also preventing problems from escalating into harmful incidents. It's worth noting that ML assessments should always be constructive and respectful, applying uniformly to all personnel involved in ML system development.

Effective assessment fuels innovation and safeguards progress in ML development.

To be effective, assessment should be structured. This could involve regular meetings, perhaps weekly, where current design decisions are critically examined and alternative design choices are thoughtfully considered. A structured approach helps make ML assessment an integral part of the organization's ML development process.

3. Diverse and Experienced Teams

Diverse teams are crucial to unlocking fresh perspectives in designing, developing, and testing ML systems. Numerous examples illustrate the adverse outcomes that follow when data scientists overlook demographic diversity in the data or results of ML systems. Increasing demographic diversity within ML teams is one potential remedy for these oversights.

In diversity we find innovation, and in expertise we ensure safety; together they are the compass guiding responsible AI advancement.

Dismissing domain experts is a dangerous gamble, as they bring invaluable insights and act as a safety net, averting potential disasters arising from misinterpretations of domain-specific data or results. The same principle applies to social science experts. Countless instances demonstrate the perils of sidelining these experts, whether by attempting to automate decisions requiring specialized knowledge or ignoring their collective wisdom entirely in AI projects.

4. Drinking Our Own Champagne

“Drinking our own champagne” is the practice of testing our own software or products within our organization, akin to “eating our own dog food.” It's a pre-alpha or pre-beta testing method that helps uncover deployment complexities before they impact customers or the public. This approach is crucial for identifying issues like concept drift, algorithmic discrimination, shortcut learning, and underspecification, which standard ML development processes often miss.

If it’s not suitable for our organization, it may not be ready for deployment. It’s a sip before you serve.
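As one illustration of what in-house testing can surface, the sketch below computes a population stability index (PSI) between a reference sample and a more recent sample of a single numeric variable, such as a model score. This is a swapped-in, commonly used drift heuristic, not a method prescribed by the book. It detects a shift in a distribution, which is only one signal that may accompany concept drift. The function name, bin count, and floor value are assumptions chosen for the example.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a recent sample of one numeric variable."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the reference range into the edge bins.
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p_ref = proportions(expected)
    p_new = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_ref, p_new))


# Synthetic data: scores seen during development vs. scores seen in internal use.
reference = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
print(psi_same, psi_shifted)
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a substantial shift worth investigating, but that threshold is a convention rather than a guarantee, and a low PSI does not rule out the other issues listed above.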

5. Moving Fast and Breaking Things

In engineering and data science, the mantra of “move fast and break things” often takes center stage. This approach can be dangerous, however, especially when applied to ML systems in high-stakes domains such as autonomous vehicles, finance, and healthcare. Even a minor glitch could lead to significant harm on a large scale.

It's crucial to shift our mindset to steer clear of such risks. Rather than solely concentrating on model accuracy, we should also prioritize understanding the implications and potential risks associated with our work.
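A small example shows why a single accuracy number can hide risk. The sketch below, using made-up labels and a hypothetical `accuracy_by_group` helper, compares overall accuracy with accuracy computed separately for two groups of records. It is only one of many possible checks and is not drawn from the book.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return accuracy computed separately for each group label."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / totals[g] for g in totals}


# Synthetic labels and predictions for eight records in two groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)

print(overall)    # 0.75
print(per_group)  # {'a': 1.0, 'b': 0.5}
```

Here the model looks acceptable overall at 75% accuracy, yet it is no better than a coin flip for group "b". A team that reports only the headline metric would never see this.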

Conclusion

Our exploration of AI incidents, cultural competencies, and risk mitigation strategies underscores the critical importance of responsible AI development. Cultivating a culture of effective assessment and accountability helps organizations identify and rectify potential pitfalls proactively. Integrating diverse and experienced teams and rigorous in-house testing practices further strengthens the foundation for responsible AI implementation. Finally, comprehensive risk assessment must take priority over haste. Together, these measures guide us toward a future where AI systems are not only technologically advanced but also ethically sound, serving as valuable tools for the betterment of society.

This article was originally published in Towards Data Science.