Machine Learning Lifecycle

In this series on the machine learning lifecycle, we’re approaching the topic from end to start. In the last article, we explored the intricacies of model deployment—the final stage of the ML lifecycle. In this article, we’ll explore model selection and training.

Model Evaluation: How to Analyze Errors and Improve Performance

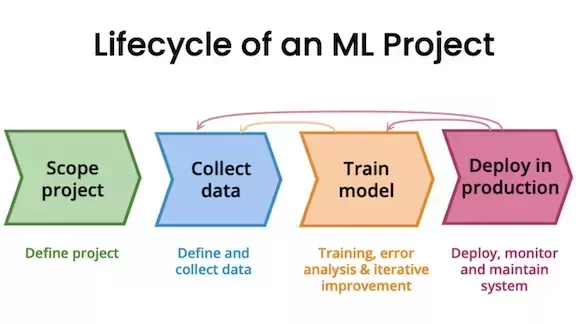

A machine learning project is iterative. We cycle through the same steps again and again to improve model performance and to minimize problems before and after deployment.

If you’re familiar with academic research or Kaggle competitions, you have seen data scientists and machine learning engineers focus on testing different ML models while iteratively tweaking hyperparameters to achieve the best possible performance. This model-centric approach is common in academia and online competitions, but a data-centric approach tends to be more efficient and better suited to real-world scenarios. Here, high-quality data is key: the main focus lies in improving the quality of the dataset and the information it contains, through feature engineering among other techniques.

When you have good data, different models and architectures tend to perform well. You don’t need to hunt for the perfect hyperparameters to squeeze out the last bit of performance. In other words, the mantra “garbage in, garbage out” rings true in model development.

But how can we know whether our data is good enough? Through error analysis.

You might be familiar with several metrics used to evaluate model performance: ROC-AUC score, precision, recall, accuracy. Even though these metrics are important, as a data scientist or machine learning engineer you must remember that your model has to solve real business problems. It is not unheard of for a data scientist to have a model with strong metrics while a businessperson cannot see how that model solves their business problems.

High Accuracy on the Test Set Isn’t Always Good!

The accuracy score might be the most intuitive metric in machine learning model evaluation. It is the ratio of correct predictions to the total number of entries in the test set.

However, several other factors must be considered before relying solely on the accuracy score of a model. Examples are:

- Fairness: Your model needs to be fair to all users. A loan approval algorithm should not discriminate based on protected attributes (e.g., age, race, disability, gender, or sexual orientation).

- Skewed Distributions and Rare Classes: If 99% of medical diagnoses are negative, a model that always predicts negative achieves an accuracy score of 99%. But it would miss every rare condition, with serious consequences for the people affected, which is unacceptable.
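To make the rare-class problem concrete, here is a minimal sketch in plain Python. The test set below is hypothetical (1,000 diagnoses, 1% positive) and the "model" is just a constant prediction; the point is only to show how accuracy and recall diverge.

```python
# Hypothetical test set: 1,000 diagnoses, 1% positive (1 = positive, 0 = negative).
y_true = [1] * 10 + [0] * 990

# A "model" that always predicts negative.
y_pred = [0] * len(y_true)


def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Share of actual positives that the model found."""
    actual_positives = sum(t == positive for t in y_true)
    true_positives = sum(
        t == positive and p == positive for t, p in zip(y_true, y_pred)
    )
    return true_positives / actual_positives if actual_positives else 0.0


print(f"accuracy: {accuracy(y_true, y_pred):.2%}")  # accuracy: 99.00%
print(f"recall:   {recall(y_true, y_pred):.2%}")    # recall:   0.00%
```

The accuracy looks excellent, yet the model finds none of the positive cases, which is exactly the failure the accuracy score hides.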

Baselines are a great help in guiding model evaluation. They help you identify what is achievable and set realistic expectations for model performance. A careful analysis also lets you focus on the areas with the most potential for improvement, instead of only the areas where the model performs worst.

Baselines: Unstructured vs. Structured Data

In data science, we constantly deal with two types of data: structured and unstructured. The former consists of spreadsheets and databases, whereas the latter consists of images, audio, and text. Each type calls for a different kind of baseline:

- Unstructured Data: Humans are very good at interpreting this kind of data. For this reason, comparing model performance with human-level performance (HLP) is a valuable way to understand and tackle errors.

- Structured Data: Humans have not evolved for these tasks. We are not wired to comprehend massive amounts of numbers in a spreadsheet with thousands of rows. In these cases, a useful starting point is a literature search for state-of-the-art and open-source results, or the performance of an existing system if you have one available.

Error Analysis Process

Your first model will likely be imperfect, and error analysis is required to guide you to the most efficient ways to improve it.

Through manual examination, you can listen to or view the examples your model got wrong. You can create categories and tag the errors. In speech recognition, for example, a “car noise” tag could highlight audio files with loud car noise in the background. You can also use MLOps tools to help in this process.

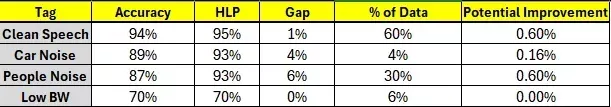
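As a rough illustration of the tagging step, the sketch below tallies how often each tag appears among reviewed errors. The file names, tags, and the `reviewed_errors` structure are hypothetical stand-ins for whatever your review tool exports.

```python
from collections import Counter

# Hypothetical review log: one entry per misrecognized clip,
# with the tags a human reviewer assigned after listening to it.
reviewed_errors = [
    {"clip": "clip_001.wav", "tags": ["car noise"]},
    {"clip": "clip_002.wav", "tags": ["people noise"]},
    {"clip": "clip_003.wav", "tags": ["car noise", "low bandwidth"]},
    {"clip": "clip_004.wav", "tags": []},  # no obvious cause found
]

# Count every tag across all reviewed errors (a clip can carry several tags).
tag_counts = Counter(tag for entry in reviewed_errors for tag in entry["tags"])

for tag, count in tag_counts.most_common():
    share = count / len(reviewed_errors)
    print(f"{tag}: {count} errors ({share:.0%} of reviewed errors)")
```

Even a simple tally like this shows which error categories are common enough to deserve attention.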

Once you have tagged the errors, you can prioritize what to work on. Consider, for example, the following table for a speech recognition model.

Accuracy is how well the model performs, and HLP is how well humans perform. The gap column shows the distance between model and human performance, and the percentage of data column shows how common that tag is within the dataset. The far-right column, potential improvement, shows how much room the model has to improve accuracy on that specific tag.

Even though low bandwidth (low BW) has the worst accuracy of them all, clean speech and people noise are much more prevalent in the dataset, and both have higher potential for improvement. In this case, trying to improve performance on low-bandwidth speech would not be a productive use of development time.
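The prioritization logic can be sketched in a few lines. The numbers below are illustrative only and are not taken from the article's table. The formula is also an assumption: a common way to estimate potential improvement is to multiply the gap to HLP by the share of data carrying that tag.

```python
# Illustrative numbers only (assumed, not the article's table).
# tag -> (model accuracy %, human-level performance %, share of data %)
tags = {
    "clean speech": (94, 95, 60),
    "car noise": (89, 93, 4),
    "people noise": (87, 89, 30),
    "low bandwidth": (70, 70, 6),
}


def potential_improvement(accuracy, hlp, share):
    """Assumed formula: gap to HLP times share of data.

    Returns the overall accuracy gain, in percentage points,
    if the model reached HLP on this tag.
    """
    gap = max(hlp - accuracy, 0)
    return gap * share / 100


# Rank tags by potential improvement, largest first.
ranked = sorted(tags, key=lambda t: potential_improvement(*tags[t]), reverse=True)

for tag in ranked:
    print(f"{tag}: {potential_improvement(*tags[tag]):.2f} percentage points")
```

With these assumed numbers, low bandwidth has the lowest accuracy but no gap to HLP, so it ranks last, while the two common tags come out on top.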

Data-Centric Model Development

At the beginning of my journey, I used to think that the secret lay in the algorithm. All I needed was the right set of hyperparameters or the right architecture. None of that is true. Good data trumps fancy models. Always.

If there’s one thing to remember from this article, it is this: garbage in, garbage out.

One of the techniques you can use to make your data better is data augmentation, a systematic way to improve the quality and quantity of your data by creating realistic examples that help the model generalize better.

The goal of data augmentation is to expand the training dataset, especially in the case of unstructured data. In speech recognition, you can add background noise to speech examples to simulate a café, for example, enhancing the robustness of the model.
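A minimal sketch of the café example, assuming audio clips are plain lists of float samples in the range [-1.0, 1.0]. The function name `add_background_noise` and the `noise_gain` parameter are made up for illustration, and the random values stand in for real recordings; a real pipeline would load audio files with a dedicated library.

```python
import random


def add_background_noise(speech, noise, noise_gain=0.3):
    """Mix a noise clip into a speech clip, sample by sample.

    Both clips are lists of floats in [-1.0, 1.0]. The noise clip is
    looped if it is shorter than the speech clip.
    """
    mixed = []
    for i, sample in enumerate(speech):
        noise_sample = noise[i % len(noise)]
        value = sample + noise_gain * noise_sample
        mixed.append(max(-1.0, min(1.0, value)))  # clip to the valid range
    return mixed


rng = random.Random(0)  # fixed seed so the example is reproducible
speech = [0.5, -0.5, 0.25, -0.25, 0.0, 0.1]          # stand-in for speech samples
cafe_noise = [rng.uniform(-1, 1) for _ in range(4)]  # stand-in for a café recording

augmented = add_background_noise(speech, cafe_noise)
print(len(augmented) == len(speech))  # the clip keeps its length and its label
```

A low `noise_gain` keeps the speech intelligible; raising it makes the example harder for the model, and at some point for humans too.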

When performing data augmentations, it is good to keep these three keywords in mind: realistic, understandable, and challenging.

- Realistic: The augmented examples should resemble real-world data.

- Understandable: Ensure that humans can still understand the content, such as recognizing spoken words in noisy environments.

- Challenging: The algorithm should currently struggle with the augmented data. This indicates potential learning gains.

Overall, good augmentations are those that introduce moderate changes without damaging the object of interest, such as horizontal flips in image classification. Bad augmentations are those that make the object of interest unrecognizable to both the model and humans, such as darkening a picture so much that it is hard to tell what it portrays.

This process is also highly iterative. You train the model, perform error analysis, perform data augmentation, and repeat the process in search of improvements in performance.

Feature Engineering: Improving Performance with Structured Datasets

With structured data, augmentation is rarely the answer. Unlike with unstructured data, it is hard to “create” realistic new users or products in a structured dataset. In this case, we focus on enriching the current features used as predictors for the target variable.

Instead of generating new data points, as in data augmentation, we enhance the information within the existing examples. To do that effectively, we analyze our features and find ways to make them more informative for the learning algorithm, providing stronger signals for more accurate results.

For instance, suppose we are working on a model for restaurant recommendations and have a feature like “price range” with the categories cheap, moderate, and expensive. We can engineer a more granular feature, such as “average meal price.” This finer-grained information could give the model a better sense of affordability, potentially leading to more targeted and satisfying recommendations.
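Here is one way this could look in code. A coarse category cannot be turned into a finer one on its own, so this sketch assumes you also have order-level records to aggregate. The restaurant names, the `orders` data, and the `add_average_meal_price` helper are all hypothetical.

```python
from statistics import mean

# Hypothetical restaurant table with the original coarse feature.
restaurants = [
    {"name": "Noodle Bar", "price_range": "cheap"},
    {"name": "Trattoria", "price_range": "moderate"},
]

# Hypothetical order-level records (assumed to be available).
orders = [
    {"restaurant": "Noodle Bar", "meal_price": 9.0},
    {"restaurant": "Noodle Bar", "meal_price": 11.0},
    {"restaurant": "Trattoria", "meal_price": 24.0},
    {"restaurant": "Trattoria", "meal_price": 30.0},
    {"restaurant": "Trattoria", "meal_price": 27.0},
]


def add_average_meal_price(restaurants, orders):
    """Return copies of the restaurant rows with an added avg_meal_price feature."""
    prices = {}
    for order in orders:
        prices.setdefault(order["restaurant"], []).append(order["meal_price"])
    return [
        {
            **row,
            # None marks restaurants with no orders to average.
            "avg_meal_price": mean(prices[row["name"]]) if row["name"] in prices else None,
        }
        for row in restaurants
    ]


enriched = add_average_meal_price(restaurants, orders)
for row in enriched:
    print(row["name"], row["price_range"], row["avg_meal_price"])
```

The number of rows stays the same; each existing example simply carries a more informative signal than the three-level category alone.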

In Closing

In this article, we explored the importance of high-quality data and how it frequently outweighs model complexity in real-world machine-learning projects. Understanding strengths and weaknesses through error analysis empowers us to make strategic improvements in our data as a means of model improvement, whether via data augmentation or refining existing features. This is the core of the data-centric approach, where we focus on enhancing the input to our models rather than solely obsessing over the models themselves.

While metrics like accuracy, precision, and recall offer valuable insights, it is crucial to remember that machine learning exists to solve business problems. Cultivating a deep understanding of the business needs and potential biases within the data ensures our models deliver tangible value. A high-performing model that fails to align with business goals or perpetuates unfairness offers little to no benefit.

The machine learning project lifecycle is an iterative process. By prioritizing error analysis, data-driven improvements, and clear communication, we dramatically increase the probability of building machine learning systems that are both effective and responsible. The most successful solutions emerge from a collaborative effort to improve data quality, refine models, and align with real-world problems.

In Part 3, we’ll discuss data collection.

Originally published in Artificial Intelligence in Plain English.