I recently enrolled in DeepLearning.AI’s course, Machine Learning Engineering for Production (MLOps). The first lesson of this specialization introduces the machine learning project lifecycle and how to approach the main steps and issues that emerge throughout an ML project.

The course defines MLOps as machine learning plus software development, and it invites students to jump into the journey that happens after training a model in a Jupyter Notebook.

In this article and others to come, I will summarize the lessons I take.

The Machine Learning Project Lifecycle

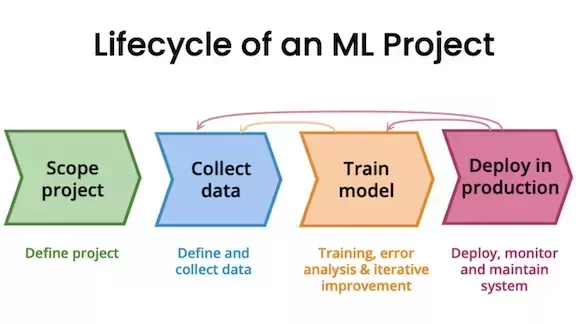

The machine learning project lifecycle has several stages, which are inherently iterative rather than sequential. Much like the cyclical nature of training and testing an AI model, the development process involves frequent revisiting and refinement across different phases.

We can define the different stages of an AI project as follows:

- Scoping: During scoping, you define the goal you would like to achieve with the project. In this stage, you decide what your x (the input features) and your y (the target variable) are.

- Data: Once you know the goal you wish to achieve with the project, you must define and collect the necessary data for training and testing the model. There are several options for obtaining labeled and well-structured datasets, both paid and free. You may also need to extract, label, and organize your own data.

- Modeling: The modeling stage is about training the model and tuning its hyperparameters, plus evaluating whether the data is adequate for the project. In the course, Andrew Ng, founder of DeepLearning.AI, contrasts the approaches that research teams and product teams take during modeling. Research teams may work with a fixed dataset and focus on optimizing different models and hyperparameters. Product teams might work with a fixed model, focusing on optimizing the hyperparameters and the data. There is no one-size-fits-all here. After training, you evaluate performance through error analysis, which might require you to return to the data and reevaluate whether the current dataset is good enough for the project.

- Deployment: After training, testing, and evaluating the model, it is time to deploy it in production. Even though this appears as the last stage in the lifecycle diagram, Andrew Ng says that the first time you deploy the model, you might be just in the middle of the overall process. With deployment comes the need to monitor and maintain the system so it keeps working as intended. When an issue arises, or an update is necessary, it is the machine learning engineer’s job to return to the modeling and data stages and evaluate the need to retrain the model or update the dataset.

To illustrate the different stages of the lifecycle, we can use speech recognition as a case study.

We start by defining the project, in this case, a voice search mobile app. In this stage, we also decide on the key metrics and estimate the resources and timeline to work on this app.

When dealing with the data, each project might require different criteria to judge whether its quality is good enough for modeling. For instance, for speech data, you might need to define how much silence you want before and after each clip. You must also evaluate whether you need to normalize the volume across different audio files, as well as the quality of the files. Speech recognition datasets usually come as pairs of audio and transcription, so you should check that the labeling style is consistent across the transcriptions.
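Two of the checks above, volume normalization and silence trimming, can be sketched in a few lines. This is a minimal illustration, assuming each clip is already loaded as a list of float samples between -1 and 1. The function names, the 0.9 target peak, and the 0.01 silence threshold are my own placeholders, not values from the course.

```python
from typing import List


def peak_normalize(samples: List[float], target_peak: float = 0.9) -> List[float]:
    """Scale a clip so its loudest sample has magnitude `target_peak`."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # pure silence: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def trim_silence(samples: List[float], threshold: float = 0.01, pad: int = 0) -> List[float]:
    """Drop leading and trailing samples quieter than `threshold`.

    `pad` keeps that many quiet samples on each side, which is one way to
    fix "how much silence" surrounds the speech (an assumed convention).
    """
    voiced = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not voiced:
        return []  # the whole clip is below the threshold
    start = max(voiced[0] - pad, 0)
    end = min(voiced[-1] + pad + 1, len(samples))
    return samples[start:end]
```

Running every clip through the same two functions before training is one way to keep volume and padding consistent across files. A real pipeline would work on arrays from an audio library rather than plain lists.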

During modeling, you would work with the algorithms or neural networks and evaluate their performance, as well as fine-tune hyperparameters to increase the predictive power of the model. The data should also be reevaluated in this stage and updated if necessary.

You would then deploy the model to a mobile app that would use the user’s microphone to record their requests and a speech API to send their requests to a prediction server, which then returns the transcript of the user’s speech along with the results of the search.

After deploying the model, you focus on monitoring performance. During this stage, issues might arise, such as lower performance for users with an accent that the model was not exposed to during training, so it struggles to transcribe their requests. You are then responsible for going back to the data stage, collecting the additional data you need, and retraining the model so it learns to capture the characteristics of that specific accent as well.

Deployment: Key Challenges

Even though deployment is the last stage in the lifecycle diagram, Ng mentions that it is important to start by defining the issues and challenges you could face once you deploy the model. These are data issues and software engineering issues.

After deployment, you must evaluate how the data changes over time. You might deal with gradual changes—which in the case of a speech recognition system would be the emergence of new terms and slang in speech—or sudden shocks. When the COVID-19 pandemic hit, people changed their purchase patterns. Clients who would not buy online started to do so, and this sudden change would trigger fraud detection systems and flag these purchases as fraud.

Overall, we can divide the data issues into two categories:

- Data Drift: These are changes in the model’s input data distribution over time, which were not accounted for during the training phase. One example would be a sudden spike in searches for a celebrity or politician.

- Concept Drift: Occurs when the relationship between the inputs and the target variable changes over time, so the same input should now lead to a different output. One example would be a user who did not use to buy online but changed their purchase pattern after the COVID-19 pandemic, so purchases that once looked like fraud became legitimate.
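To make data drift more concrete, here is a minimal sketch of one common way to quantify it: the Population Stability Index (PSI), which compares how a single numeric input feature is distributed at training time and in production. The course does not prescribe this metric. The function names, the 10 bins, and the `eps` floor are my own assumptions for illustration.

```python
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Return the fraction of `values` that falls in each bin defined by `edges`.

    Values outside the edge range are counted in the first or last bin.
    """
    if not values:
        raise ValueError("need at least one value")
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        # Walk right until v is below the next edge or we reach the last bin.
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(
    reference: Sequence[float],
    live: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference (training) sample and a live (production) sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # avoid zero-width bins for constant data
    edges = [lo + i * width for i in range(n_bins + 1)]
    # Floor empty bins at eps so the logarithm below is always defined.
    ref = [max(f, eps) for f in bin_fractions(reference, edges)]
    cur = [max(f, eps) for f in bin_fractions(live, edges)]
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))
```

A PSI of 0 means the two samples fall into the bins in identical proportions, and larger values mean a bigger shift. A commonly quoted rule of thumb treats values above roughly 0.25 as a significant shift, but that cutoff is something to tune per project. Note that PSI only looks at the inputs, so it can flag data drift but not concept drift, which requires labels to detect.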

The software engineering issues refer to the resources and functionalities of the system. For one, you must decide whether you have a real-time system, which responds to model inference requests within milliseconds to a few seconds, or a batch system, where the model inference is applied to a large volume of data at once, usually overnight, at off-peak hours. Each system comes with its own framework and infrastructure requirements for effective operation.

You also have to decide where the data processing and inference occur. Cloud systems run on remote servers in data centers, allowing for more computing power and resources. Edge/Browser systems run on local devices or within web browsers, which may enhance privacy and ensure that the system works even in limited connectivity environments.

It is also crucial to account for computational resources—GPU, CPU, and memory. In other words, you must ensure that you can run what you train. You also have to ensure the security and privacy of the user’s data.

Deployment Patterns

There are several strategies for deploying a model in production. Since the model has yet to be proven, it is a good idea to roll it out gradually. We start by giving the model a small amount of traffic and monitoring its performance. If something goes wrong, it is easy to roll back to a previously proven model or system, and the failure only affects that small share of traffic.

Let’s take as an example a system to identify cracks in cellphone screens in a factory. Here are the different deployment strategies and stages to employ:

- Shadow Mode Deployment: In this case, we would have an AI system that shadows the human in identifying cracks in the screens. The model’s outputs are not used for decisions yet, and the purpose is to evaluate the new model on real-world data without affecting the production system.

- Canary Deployment: We gradually shift a small portion of traffic to the model and monitor its outputs. This method helps reduce risk by slowly introducing the model and rolling back if issues arise. In this stage, the model serves more as an AI assistant to humans.

- Blue/Green Deployment: In this case, we have an older model (blue) and a new model (green). Traffic is switched from blue to green once the new version is tested and ready, and it is possible to quickly roll back to the older model if the new model faces issues.
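The traffic-splitting idea behind canary and blue/green deployments can be sketched as a small router. This is an illustration under my own assumptions, not how any particular serving platform does it: `make_canary_router`, `candidate_share`, and the idea of a model as a plain Python callable are all hypothetical.

```python
import random
from typing import Callable, Optional, Tuple

Model = Callable[[str], str]  # hypothetical: a model maps a request to a response


def make_canary_router(
    stable: Model,
    candidate: Model,
    candidate_share: float,
    rng: Optional[random.Random] = None,
) -> Callable[[str], Tuple[str, str]]:
    """Build a router that sends `candidate_share` of requests to the new model."""
    if not 0.0 <= candidate_share <= 1.0:
        raise ValueError("candidate_share must be between 0 and 1")
    if rng is None:
        rng = random.Random()

    def route(request: str) -> Tuple[str, str]:
        # rng.random() is uniform in [0, 1), so this branch is taken
        # with probability candidate_share.
        if rng.random() < candidate_share:
            return "candidate", candidate(request)
        return "stable", stable(request)

    return route
```

With `candidate_share=0.05`, about 5% of requests reach the new model, which corresponds to the canary stage. Rebuilding the router with `candidate_share=1.0` is the blue/green switch, and setting it back to `0.0` is the rollback. Returning the name of the model that served each request makes it possible to compare their metrics later.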

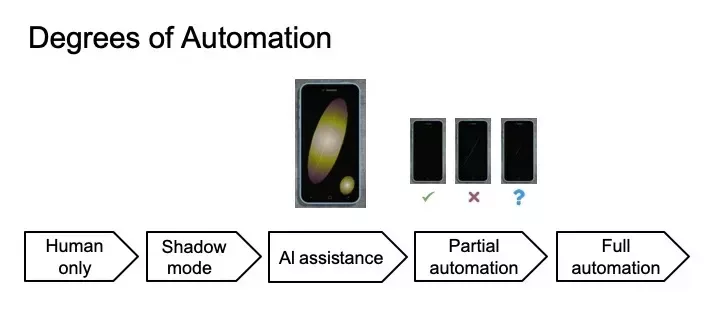

Degrees of Automation

Considering the different characteristics of each project and goal, it is essential to consider the degree of automation of machine learning systems according to the task and the data.

In human-only systems, we do not have any input or output from an AI model, and the task process is entirely in the hands of humans. In shadow mode systems, we have an AI model mimicking the human task, but its outputs do not contribute to the decision-making process. This particular system helps to identify issues early on.
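Shadow mode is simple to express in code: the model runs on every item, its prediction is logged, and only the human's decision is returned. The sketch below assumes decisions are plain string labels. `ShadowLog` and `inspect_in_shadow_mode` are hypothetical names of my own.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple


@dataclass
class ShadowLog:
    """Pairs of (human_decision, model_prediction) collected in shadow mode."""

    records: List[Tuple[str, str]] = field(default_factory=list)

    def agreement_rate(self) -> float:
        """Fraction of items where the model matched the human (0.0 if empty)."""
        if not self.records:
            return 0.0
        matches = sum(1 for human, model in self.records if human == model)
        return matches / len(self.records)


def inspect_in_shadow_mode(
    item: Any,
    human_inspect: Callable[[Any], str],
    model_predict: Callable[[Any], str],
    log: ShadowLog,
) -> str:
    """Record the model's prediction, but act only on the human's decision."""
    human_decision = human_inspect(item)
    log.records.append((human_decision, model_predict(item)))
    return human_decision
```

Once enough records accumulate, the agreement rate, and especially the items where the two disagree, show whether the model is ready to take on more of the decision.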

In AI assistance and partial automation systems, we have a higher degree of interaction between humans and AI. For example, the AI assistant approach would guide a human inspector by highlighting regions of interest in identifying cracks and defects in cellphone screens. With partial automation, the model becomes a part of the decision-making process, and the human is only called upon for inspection when the model is not confident in its output. Full automation systems are those where the AI assumes responsibility for the entire decision-making process without human intervention.
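Partial automation boils down to a confidence check. Here is a minimal sketch, assuming the model returns a label together with a confidence score between 0 and 1. The `decide` function, the 0.9 threshold, and both callables are hypothetical names for illustration.

```python
from typing import Any, Callable, Tuple


def decide(
    item: Any,
    model_predict: Callable[[Any], Tuple[str, float]],
    human_inspect: Callable[[Any], str],
    min_confidence: float = 0.9,
) -> Tuple[str, str]:
    """Return (label, decided_by), deferring to a human on low confidence."""
    label, confidence = model_predict(item)
    if confidence >= min_confidence:
        return label, "model"
    # The model is unsure, so the item goes to a human inspector.
    return human_inspect(item), "human"
```

Raising `min_confidence` toward 1 sends more items to the human, and lowering it toward 0 moves the system closer to full automation, so this one parameter roughly controls where a system sits on the spectrum.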

These deployment applications span a spectrum, from relying solely on humans to relying on the AI model for decision-making. Each application might require a different degree of automation. For a mobile search app with speech recognition, for example, full automation works better due to scale. In healthcare, it is crucial to have human-in-the-loop deployments, since a mistake caused by an AI could have an enormous impact on human life.

Deployment Is Iterative

As previously mentioned, deploying the model is hardly the last stage in the lifecycle. After deployment, you must monitor the system for both software and data issues.

For software, you monitor memory, computational costs, latency, server load, etc. When it comes to the data, you monitor both the input and output metrics. For speech recognition models, for instance, you must monitor the average length of speech, as well as the average volume of speech, and perform any enhancements as necessary. The output metrics might indicate whether the model is decaying in performance. You can identify this decay when users constantly redo the searches or switch to typing instead of using the speech option.

It is necessary to set thresholds on these metrics to identify when to adapt the system or retrain the model. Understand how fast your data changes due to the nature of the task so you can prepare for the quick adoption of new information. Ng notes that user data tends to change much more slowly. Think face recognition. Faces don’t change that much over time. But business data, especially factory products, might have a much faster pace of change.
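The thresholds mentioned above could be encoded as simple allowed ranges per metric. This is a sketch under my own assumptions: the `Threshold` class, the metric names, and every numeric limit are placeholders, and a production system would typically rely on a dedicated monitoring tool rather than hand-rolled checks.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Threshold:
    """Allowed range [low, high] for one monitored metric."""

    metric: str
    low: float
    high: float


def check_metrics(observed: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """Return one alert message per metric that is missing or out of range."""
    alerts = []
    for t in thresholds:
        value = observed.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no data")
        elif not t.low <= value <= t.high:
            alerts.append(f"{t.metric}: {value} outside [{t.low}, {t.high}]")
    return alerts


# Hypothetical limits for the speech recognition example.
SPEECH_THRESHOLDS = [
    Threshold("latency_ms", 0.0, 500.0),        # software metric
    Threshold("avg_clip_seconds", 1.0, 10.0),   # input metric
    Threshold("retry_rate", 0.0, 0.2),          # output metric: users redoing a search
]
```

Calling `check_metrics` on each new batch of aggregated metrics gives a list of alerts, and an empty list means everything is within range. How often to run the check depends on how quickly the data changes for the task at hand.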

In Closing

In this article, we have explored the intricacies of just one of many stages in the machine learning project lifecycle: deployment. Deploying the model is hardly the final step of the ML project, and it comes with its own challenges, from software to the nature of the data.

It is crucial to understand how changes in the culture and the environment might provoke a decay in model performance, either slowly or suddenly, and be ready to promote changes as quickly as possible to avoid negative impacts on production.

The degree of automation is also a relevant aspect of the project. Although AI systems can autonomously perform some tasks, human input is still crucial across many industries and projects.

The next article in this series will explore selecting and training models.

Originally published in Artificial Intelligence in Plain English.