In our series, "Accelerating Your Data Innovation Journey," we've delved into healthcare analytics, fostering startup-like teams with an entrepreneurial spirit, and creating an enterprise MAP (Maturity Acceleration Plan) to guide your journey and showcase value. Now, we'll explore the data, analytics and AI platform (analytics platform for short) – the driving force behind innovation within our operating model and community.

As mentioned in our first post in this series, “Accelerating Your Data Innovation Journey in the Healthcare Industry,” organizations and operating models are often constrained or liberated by the technology architecture that supports them. These days, organizations often have an enterprise data warehouse (EDW) and a variety of additional data environments that have sprung up over the years. Over time, these environments can grow complex, scattered, and outdated, resulting in higher maintenance costs and a need for help in scaling to align with the enterprise's strategic and operational goals.

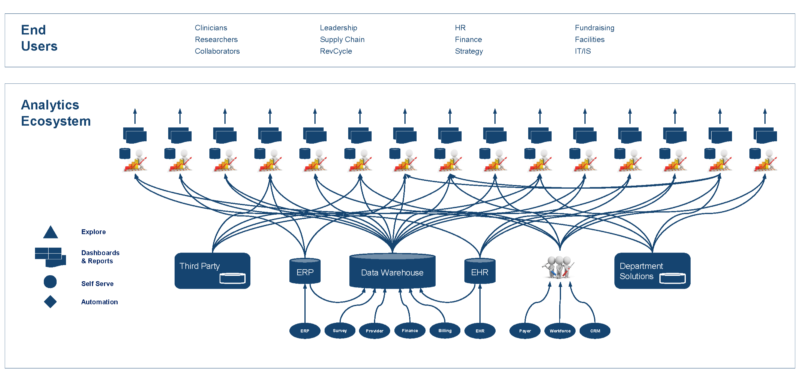

What does your analytics platform consist of today? Take stock of all you have – creating a visual can be helpful. As described above, departmental solutions can be costly and prohibitive to scale to an enterprise solution. Seek out these solutions first and understand what they exist to accomplish. There are likely opportunities to decrease redundancy, establish a single version of truth, centralize for broader enterprise access instead of confined departmental access, and integrate for more profound exploration, advanced analytics, and automation. The goal is to “evolve to a resilient, scalable, and extensible data and analytics platform that looks like it was built by a single team — one that doesn’t look like a ‘Frankenstein’” (see “Operating Like a Startup”).

The Enterprise Data Lakehouse

An enterprise-level analytics platform goes beyond the typical database. The data warehouse concept has existed since the late 1980s and became a fundamental analytics component in the 1990s. Large volumes of structured data can be stored, organized, and optimized in a data warehouse with data marts to support analytics. This reference architecture was introduced and popularized by the book Corporate Information Factory by Bill Inmon, Claudia Imhoff, and Ryan Sousa.

Data lakes were introduced as a concept as early as 2008 and popularized in a blog written in 2010 by James Dixon, the Chief Technology Officer at Pentaho (now part of Hitachi Vantara). To illustrate the difference between a data lake and a data mart or data warehouse, James wrote:

“If you think of a data mart [in a data warehouse] as a store of bottled water – cleansed and packaged and structured for easy consumption – the data lake is a large body of water in a more natural state. The contents of the data lake stream in from a source to fill the lake, and various users of the lake can come to examine, dive in, or take samples.”

Recently, the concept of a data lakehouse has emerged, which combines the best features of the data lake and data warehouse. The enterprise data lakehouse will accommodate and support the analysis of large volumes of both structured and unstructured data. It will hold vast amounts of raw, unprocessed data from various sources, allowing access to traditional and advanced analytics. As it can support the data in its original structure and format, it is a flexible, cost-effective solution for storing data. The advantage of the lakehouse is that it bridges the gap between the flexibility of the data lake and the performance of the data warehouse: alongside the raw data, it contains structured, processed, and organized database structures that support quick and efficient access to data for analytical queries. We like the concept of a virtual or even physical data lakehouse, which encompasses both data lake and data warehouse environments, even if they are not on the same technology platforms.

If your analytics team does not currently have a lakehouse and you are looking to build or buy one, several technologies and platforms can help you achieve your goals. Here are some options to consider (we know we may have missed some, and we apologize for that):

- Cloud-Hosted Data Lakehouse Solutions: Cloud-based providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer managed data services that make setting up and operating a data lakehouse architecture easier. AWS provides Amazon S3, AWS Glue, Redshift and other platforms/services; Azure offers Azure Data Lake Storage, Azure Data Lake Analytics and Azure Synapse Analytics along with other integrated platforms/services; while GCP has Google Cloud Storage and BigQuery, which can be used in combination with other analytic platforms/services in GCP.

- Open Source Data Lakes: Apache Hadoop is an open-source framework that can be used to build a data lake. It includes components like Hadoop Distributed File System (HDFS) for storage, and Apache Hive or Apache Spark for data processing and analytics.

- Specialized Data Platforms: Cloudera offers a comprehensive platform that can be deployed on-premise and on cloud for data management and analytics. Databricks is a cloud-based collaborative analytics platform built on Apache Spark for big data and machine learning.

- NoSQL Databases: NoSQL databases like MongoDB, Cassandra, or Couchbase can be part of a data lake architecture to store and manage unstructured and semi-structured data. These platforms align well with use cases that require high availability and near-real-time processing (e.g., master data management).

- Data Warehouse Platforms: High-performance data warehouse platforms that integrate with data lake environments include solutions like Snowflake, Yellowbrick and IBM Netezza Performance Server. Snowflake and Yellowbrick are designed to run on AWS, Azure and GCP. IBM Netezza Performance Server is available as a SaaS on AWS, Azure and IBM Cloud.

- Data Integration Tools: Solutions like Apache NiFi, Talend, IBM DataStage or Informatica can help with data routing and movement between systems, as well as data ingestion, transformation, and loading into the lakehouse.

- Data Cataloging Tools: Implementing a data cataloging tool like Apache Atlas, Talend Data Catalog, Ataccama ONE, IBM InfoSphere Information Governance Catalog, Informatica Axon, Collibra or Alation can help with data discovery, governance, and metadata management.

- Data Virtualization Platforms: Data virtualization tools like Denodo or Cisco Data Virtualization can provide a unified view of data from various sources.

- Data Governance and Security Tools for Data Lake: To ensure proper data governance and security, you can consider tools like Apache Ranger, AWS IAM, or Azure Active Directory.

Many application vendors aim to market their analytics platform as an enterprise solution, particularly well-suited for delivering operational reporting to bolster clinical operations, clinical research, finance, supply chain, HR, and more. However, this is only part of your analytics platform architecture. To address the strategic needs of the enterprise, you will need a trusted, single version of truth that integrates data across these environments into a platform that is nimble, adaptable, highly secure and cost-effective, and that supports analytics and AI at a massive scale using structured and unstructured data.

Now, if you are just getting started, need to build credibility, and funding is limited, integrating data from additional sources into your primary application database is possible and even desirable – we’ve been here more than a few times. However, it's crucial to consistently bear in mind that this marks just the initial phase of your journey. If not managed prudently, the scenario that can unfold, if it hasn't already, involves multiple teams creating distinct application-specific "data warehouses." Instead of achieving a unified source of truth, you could find yourself with an array of disconnected "data warehouses," resorting to Excel spreadsheets for integration. So, having a high-level architecture for how these different solutions fit together to form a single analytics platform is essential before starting this journey.

Building the Analytics Platform

Before we build or update, let us clean house to streamline, simplify, consolidate, and optimize our environment so we avoid that Frankenstein! This brings to mind the five Ss from Toyota’s lean methodologies:

- Sort: Go through and keep only what is needed

- Straighten (orderliness): Arrange everything so it’s easy to find

- Shine (cleanliness): High quality

- Standardize (create rules): Organizational definitions, one business owner, simplify

- Sustain (self-discipline): Maintain going forward with consistency across delivery teams as they all follow the same framework

Next, think about where to host an enterprise data lake – with the ability to land structured and unstructured data. From there, how do you easily extract, transform and load the data into a relational database on-prem or in the cloud? And how soon do you anticipate your environment changing from on-prem to cloud or from one technology to another?

How will your developers and self-service users access the data when you build your data marts? What tools can connect, and does the data need to be organized or optimized for reporting tools outside the environment? Can tools used to access live streaming data from the bedside co-exist beside batch extract, transform, load (ETL) tools? Can data science tools connect, and can you build containers with models to train for deeper insight into what is coming or what to prescribe for your business/clinical partners?

How many source systems do you intend to integrate? (Hint: over 100 is not a stretch in healthcare.) Do you require significant additional storage for tasks such as storing snapshots in time, archiving legacy source system data that remains unconverted, and preserving access to query history? Plan for development, test, and production environments at the minimum; a release environment is not a bad idea to test upgrades. Maintain a disaster recovery/failover copy of everything. Transitioning everyone to the failover copy, so they can resume their tasks after a brief interruption while the system is reconfigured to the disaster recovery version, is more efficient than having them wait during recovery and potential reconstruction efforts.

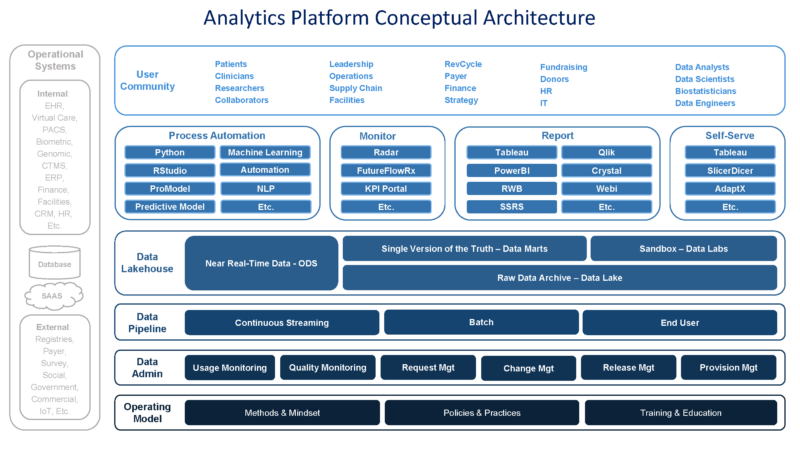

These are a sample of the questions that help inform the shape and structure of your conceptual architecture. We are sure you can think of others. When you find the sweet spot, the conceptual architecture helps your business partners comprehend the solutions you're constructing to fulfill their requirements. It also allows your delivery teams (engineers, analysts, scientists, and others) to see how their efforts contribute to expanding the analytics platform and where they can leverage the work of their peers. Stacking hands on an illustration like the one above is an essential step in ensuring that the community is operating in unity to create a single analytics platform that looks like it was built by a single team – and not a Frankenstein.

To further achieve the goal of evolving a single analytics platform, it’s essential for analytics teams to assess their requirements, existing infrastructure, budget constraints, and scalability needs before choosing a specific technology. Also, it is essential to consider factors like ease of integration with existing systems, support for data formats, data processing capabilities, and ease of data access for analytics and reporting. Consulting with data architects or experts can be beneficial in making the right technology choices for your implementation.

Turning Vision into Reality

As discussed in our second installment in this series, “Operating Like a Startup,” delivery teams organize around strategic and operational priorities and function independently with a laser focus on the needs of their business partners. So, how do we ensure they deliver on this need but do it in a way that can be leveraged across other delivery teams – always working toward building a single, trusted version of the truth? We’ve discussed the importance of a conceptual architecture in creating a shared vision for what the community is building at a high level. Now, let’s look at how delivery teams turn that vision into a reality and the importance of frameworks.

A small, agile delivery team is best made up of versatile analytic talent where team members are few in number and can wear several hats. Though usually, a person best suited for data engineering may not be the best data analyst or data architect, the data engineer must understand the relationships in the data and data modeling. The data analyst can also learn data modeling to partner with the data engineer on design, such as star schemas, encompassing all the essential components for a Subject Matter Area (SMA) or data mart.
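To make the star schema idea concrete, here is a minimal sketch of a data mart with one fact table and two dimension tables, built in an in-memory SQLite database. The table names, column names, and sample rows are all hypothetical and only illustrate the shape an engineer and analyst might agree on for an SMA; a real design would follow your own subject area and platform.

```python
import sqlite3

# Hypothetical star schema for an "encounters" subject matter area (SMA).
# Every table and column name below is an illustrative assumption.
SCHEMA = """
CREATE TABLE dim_date (
    date_key INTEGER PRIMARY KEY,
    calendar_month TEXT NOT NULL
);
CREATE TABLE dim_department (
    department_key INTEGER PRIMARY KEY,
    department_name TEXT NOT NULL
);
CREATE TABLE fact_encounter (
    encounter_id INTEGER PRIMARY KEY,
    date_key INTEGER NOT NULL REFERENCES dim_date(date_key),
    department_key INTEGER NOT NULL REFERENCES dim_department(department_key),
    length_of_stay_days REAL NOT NULL
);
"""


def build_mart() -> sqlite3.Connection:
    """Create the schema in memory and load a few made-up sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO dim_date VALUES (?, ?)",
        [(20240105, "2024-01"), (20240210, "2024-02")],
    )
    conn.executemany(
        "INSERT INTO dim_department VALUES (?, ?)",
        [(1, "Cardiology"), (2, "Oncology")],
    )
    conn.executemany(
        "INSERT INTO fact_encounter VALUES (?, ?, ?, ?)",
        [(100, 20240105, 1, 2.0), (101, 20240105, 1, 4.0), (102, 20240210, 2, 3.0)],
    )
    conn.commit()
    return conn


def average_stay_by_department(conn: sqlite3.Connection) -> list:
    """Join the fact table to a dimension and aggregate a measure."""
    query = """
        SELECT d.department_name, AVG(f.length_of_stay_days)
        FROM fact_encounter AS f
        JOIN dim_department AS d ON d.department_key = f.department_key
        GROUP BY d.department_name
        ORDER BY d.department_name
    """
    return conn.execute(query).fetchall()
```

Calling `average_stay_by_department(build_mart())` joins the facts to the department dimension and averages length of stay per department. The point of the layout is that measures live in the fact table while descriptive attributes live in the dimensions, so the same dimensions can be reused across reports.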

Not everyone needs to excel at everything, but purposeful and strategic staffing of a delivery team with critical thinking, problem-solving, and essential analytic/technical skillsets can eliminate the necessity for additional personnel to fulfill roles such as dedicated data architect or project manager. It also helps the team move faster, with fewer handoffs, explanations, and start-up costs for each contributor. This type of delivery team will be able to extract, load, model, transform, optimize, and validate an SMA in partnership with their business or clinical partners, meeting their requirements quickly and efficiently.

To achieve success, the delivery teams must operate as a community, and evolve a resilient, scalable, and extensible data and analytics platform that looks like it was built by a single team. Some delivery team responsibilities include:

- Own data stewardship for selected data domains

- Promote reuse across delivery teams

- Contribute to tool assessments

- Promote data fluency

The federated operating model operates as a unified community while enabling delivery teams to be integrated within the service areas they support. Every delivery team has the capacity to contribute, and should contribute, to the central data repositories, which encompass unstructured or structured data repositories like an enterprise data lake, structured databases, data marts, or labs. Additionally, they can construct extracts and application programming interfaces (APIs) to transmit data to source or downstream systems, all within a tightly integrated framework.

Follow a Tightly Integrated Framework

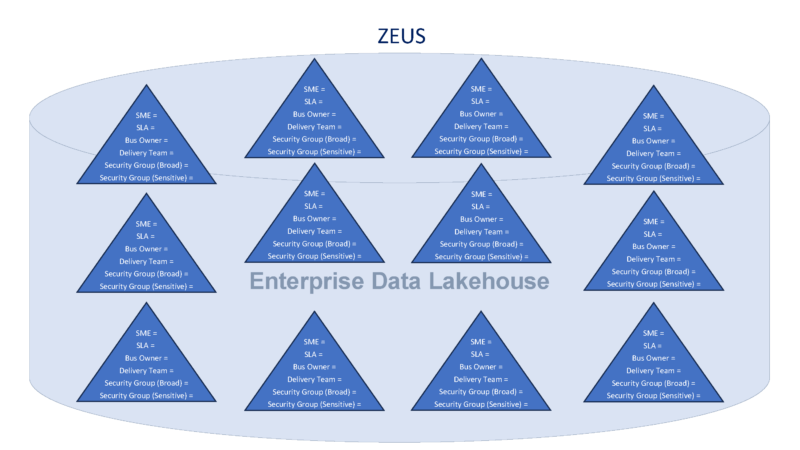

We’ll describe such a framework and call it Zeus. Zeus exists for multiple reasons, including the ease of managing security by defining one business owner for each SMA to help with data governance and change management. Each SMA in a Zeus framework is assigned a designated business owner, a dedicated delivery team accountable for construction and upkeep, an inclusive security group facilitating self-service to the 'open' schema, and a more restricted security group governing access to sensitive data within a highly secured schema. Additionally, a service level agreement defines the data refresh frequency and specific times of day, and outlines the support level in the event of a failure. For instance, platinum status triggers immediate notifications upon failure (day or night), gold aims for resolution by the end of day, and silver before month-end.
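As a rough illustration of what one SMA's registration could look like, the sketch below models the attributes described above as a small Python record. The class names, field names, and the example values are hypothetical; Zeus as described here is a framework of conventions, and this is only one assumed way to write those conventions down.

```python
from dataclasses import dataclass
from enum import Enum


class SlaTier(Enum):
    """Support levels as described in the text; the wording is paraphrased."""
    PLATINUM = "immediate notification on failure, day or night"
    GOLD = "resolution targeted by end of day"
    SILVER = "resolution targeted before month-end"


@dataclass(frozen=True)
class SubjectMatterArea:
    """Hypothetical registration record for one SMA."""
    name: str
    business_owner: str             # exactly one designated business owner
    delivery_team: str              # team accountable for construction and upkeep
    open_security_group: str        # self-service access to the 'open' schema
    restricted_security_group: str  # access to the highly secured schema
    refresh_schedule: str           # refresh frequency and times of day
    sla_tier: SlaTier

    def support_commitment(self) -> str:
        """Summarize what happens when a refresh fails."""
        return f"{self.name}: {self.sla_tier.value}"


# Example values are made up for illustration only.
encounters = SubjectMatterArea(
    name="Encounters",
    business_owner="VP Clinical Operations",
    delivery_team="Clinical Analytics",
    open_security_group="sma_encounters_open",
    restricted_security_group="sma_encounters_secure",
    refresh_schedule="daily at 05:00",
    sla_tier=SlaTier.GOLD,
)
```

Keeping these attributes in one record per SMA gives governance, security, and support a single place to look when ownership or access questions come up.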

Zeus aligns every team with common design strategies, from the enterprise data lake and staging databases to the star schema data marts, labs, and everything in between, such as a data refinery. The key rule of thumb is that every database, container, or node has one business and one delivery team owner and change is managed through those owners. It is essential to integrate all analytics into a common framework like Zeus to ensure a seamless and cohesive data ecosystem.
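The one-owner rule of thumb lends itself to an automated check. The following is a minimal sketch, assuming a hypothetical inventory in which each database, container, or node is listed with its owners; the dictionary keys and sample assets are invented for illustration.

```python
def find_ownership_gaps(assets: list) -> list:
    """Return names of assets that do not have exactly one business owner
    and exactly one delivery team owner."""
    gaps = []
    for asset in assets:
        business = asset.get("business_owners", [])
        delivery = asset.get("delivery_team_owners", [])
        if len(business) != 1 or len(delivery) != 1:
            gaps.append(asset["name"])
    return gaps


# Hypothetical inventory; names and owners are made up.
inventory = [
    {"name": "enterprise_data_lake", "business_owners": ["CDO"],
     "delivery_team_owners": ["Platform"]},
    {"name": "finance_mart", "business_owners": ["CFO", "Controller"],
     "delivery_team_owners": ["Finance Analytics"]},
    {"name": "research_lab", "business_owners": ["CRO"],
     "delivery_team_owners": []},
]
```

Running `find_ownership_gaps(inventory)` flags `finance_mart` (two business owners) and `research_lab` (no delivery team owner), which are the cases where change management would otherwise have no single accountable party.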

It is the frameworks (and automation) that allow the loosely coupled delivery teams to operate as a community and evolve a unified data and analytics platform. We will discuss other frameworks and automation in future pieces.

In Closing

Accelerating our data innovation journey demands a robust data, analytics, and AI platform informed by a conceptual architecture that aligns and unifies the community. By harnessing the power of advanced analytics tools and technologies, healthcare organizations can revolutionize patient care, clinical research, and operational efficiency. Such a platform empowers healthcare professionals to extract actionable insights from vast amounts of healthcare data, leading to improved diagnoses, personalized treatment plans, and better patient outcomes. As we embark on this transformative path, we can make informed decisions that positively impact public health, optimize resource allocation, and ultimately create a healthier future for all. In future pieces, we will go into more detail on developing a well-formed conceptual architecture and explore other frameworks that delivery teams use to align and streamline delivery.

Now that we have talked about the elements of your data, analytics and AI ecosystem (operating model, entrepreneurial mindset, and analytics platform), and how to start the journey and navigate the evolution using a MAP, we will discuss the evolution of the ecosystem, how healthcare systems are using it to deliver value, and explore future trends.